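Lab Study: Image Fusion Summary Report

1. Experiment Content

Image fusion

2. Learning Steps

1) Configure the environment and build OpenCV

① Download the source archives for opencv 4.1.2 and opencv_contrib 4.1.2

URLs: https://github.com/opencv/opencv/releases

https://github.com/opencv/opencv_contrib/releases

② Install the dependency packages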

#sudo apt-get install build-essential

#sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

#sudo apt-get install python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libdc1394-22-dev

#sudo add-apt-repository "deb http://security.ubuntu.com/ubuntu xenial-security main"

#sudo apt update

#sudo apt install libjasper1 libjasper-dev

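③ Extract

Extract both archives, move the opencv_contrib folder into the opencv folder, and create a new build folder inside the opencv folder. Open a terminal in the build folder, then run:

#cmake -D CMAKE_BUILD_TYPE=Release -D CMAKE_INSTALL_PREFIX=/usr/local -D OPENCV_EXTRA_MODULES_PATH=/home/(your user name)/opencv-4.1.2/opencv_contrib-4.1.2/modules/ ..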

#sudo make

#sudo make install

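2) Compile and run an OpenCV program

① Download Visual Studio Code

② Create a project folder with four subfolders: bin (executables), build (build files), include (headers), and src (.cpp source files)

Method 1:

③ Create a CMakeLists.txt file in the project folder with the following content: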

cmake_minimum_required(VERSION 3.10)

project(main)

find_package(OpenCV 4.1.0 REQUIRED)

set(CMAKE_RUNTIME_OUTPUT_DIRECTORY ../bin/)

add_compile_options(-std=c++11)

include_directories(include)

include_directories(${OpenCV_INCLUDE_DIRS})

aux_source_directory(./src SOURCES)

add_executable(main ${SOURCES})

target_link_libraries(main ${OpenCV_LIBS})

④ Enter the build folder and run

#cmake ..

#make

⑤ Enter the bin folder and run

#./main

Method 2:

③ Create a Makefile in the project folder with the following content:

INCLUDES = -I/usr/local/include/opencv4 -I./include
# adjust the include path above to where your own OpenCV headers are installed
LIBS = -L/usr/local/lib \
       -lopencv_core \
       -lopencv_imgproc \
       -lopencv_highgui \
       -lopencv_imgcodecs
# add one -lopencv_<module> entry for each module whose headers you use
CXXFLAGS = -g -Wall -O0 -std=c++11 $(INCLUDES)
OUTPUT = ./bin/main
HEADERS =
SRCS = $(wildcard ./src/*.cpp)
OBJS = $(SRCS:.cpp=.o)
CXX = g++

all: $(OUTPUT)

$(OUTPUT): $(OBJS)
	$(CXX) $^ -o $@ $(LIBS)

%.o: %.cpp $(HEADERS)
	$(CXX) -c $< -o $@ $(CXXFLAGS)

.PHONY: all clean

clean:
	rm -f $(OBJS) $(OUTPUT)

④ In the project folder, run

#make

⑤ Enter the bin folder and run

#./main

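3) Learning basic OpenCV operations

① Reading, displaying, and writing images

Mat inputimage;//declare the image variable

inputimage=imread("image file path");//read the image file into the variable

imshow("name",inputimage);//display the image

imwrite(".jpg",inputimage);//write the image to a file (fill in the output file name)

waitKey();//wait for a key press so the window stays open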

② Creating a display window

namedWindow("name",0);//0 (WINDOW_NORMAL) makes the window resizable; with WINDOW_AUTOSIZE the window matches the size of the loaded image

imshow("name",inputimage);

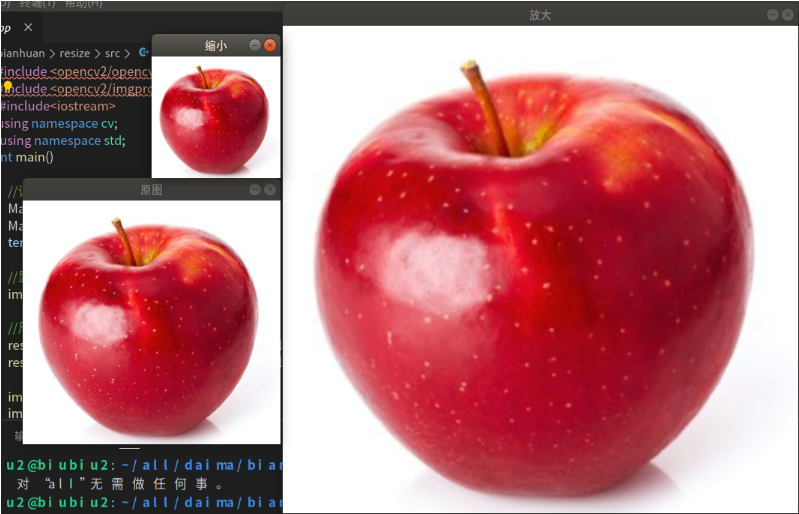

③ resize(): changing the image size

void resize(InputArray src, OutputArray dst, Size dsize, double fx=0, double fy=0, int interpolation=INTER_LINEAR)

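//dsize and fx, fy cannot all be 0. fx and fy are the scale factors along the x and y axes; when they are left at the default 0, they are computed as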

fx=(double)dsize.width/src.cols

fy=(double)dsize.height/src.rows

//when dsize is 0 (Size()): dsize=Size(round(fx*src.cols),round(fy*src.rows));
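The relationship between dsize, fx, and fy can be checked without OpenCV. The sketch below is plain C++. `computeDsize` and `computeScale` are hypothetical helper names that only restate the two formulas in the comments above. The sketch uses std::lround, so exact halfway cases (such as 50.5) may round differently from OpenCV's own internal rounding.

```cpp
#include <cassert>
#include <cmath>
#include <utility>

// Hypothetical helper: output size when dsize is 0 and fx, fy are given,
// following dsize = Size(round(fx*src.cols), round(fy*src.rows)).
std::pair<int, int> computeDsize(int srcCols, int srcRows, double fx, double fy) {
    assert(fx > 0 && fy > 0);  // fx and fy must be non-zero when dsize is 0
    int width = static_cast<int>(std::lround(fx * srcCols));
    int height = static_cast<int>(std::lround(fy * srcRows));
    return std::make_pair(width, height);
}

// Hypothetical helper: scale factors when fx, fy are 0 and dsize is given,
// following fx = dsize.width/src.cols and fy = dsize.height/src.rows.
std::pair<double, double> computeScale(int srcCols, int srcRows, int dstWidth, int dstHeight) {
    assert(dstWidth > 0 && dstHeight > 0);
    return std::make_pair(static_cast<double>(dstWidth) / srcCols,
                          static_cast<double>(dstHeight) / srcRows);
}
```

For example, shrinking a 640×480 image with fx = fy = 0.5 gives a 320×240 output, which is the same as passing Size(320, 240) as dsize.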

//Example code

#include <opencv2/opencv.hpp>

#include <opencv2/imgproc/imgproc.hpp>

using namespace cv;

int main()

{

//read the image

Mat srcImage=imread("../1.jpg");

if (srcImage.empty()) return -1;//stop if the image could not be read

Mat temImage,dstImage1,dstImage2;

temImage=srcImage;

imshow("1.jpg",srcImage);//display the original image

resize(temImage,dstImage1,Size(temImage.cols/2,temImage.rows/2),0,0,INTER_LINEAR);//shrink to half size

resize(temImage,dstImage2,Size(temImage.cols*2,temImage.rows*2),0,0,INTER_LINEAR);//enlarge to twice the size

imshow("half size",dstImage1);

imshow("double size",dstImage2);

waitKey();

return 0;

}

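④ The circle function

void circle(InputOutputArray img, Point center, int radius, const Scalar& color, int thickness = 1, int lineType = LINE_8, int shift = 0)

//img: the image to draw on; single- or multi-channel, with no special requirements

//center: coordinates of the circle's center

//radius: radius of the circle

//color: color of the circle, e.g. CV_RGB(255, 0, 0) for red, CV_RGB(0, 0, 255) for blue, CV_RGB(255, 255, 255) for white, and CV_RGB(0, 0, 0) for black

//thickness: thickness of the circle's outline; larger values give thicker lines, and a negative value draws a filled circle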
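To make the effect of a negative thickness concrete, here is a minimal sketch of what a filled circle means at the pixel level: every pixel within `radius` of the center is set. This is plain C++ with a hypothetical `GrayImage` type, not cv::Mat. It is not OpenCV's actual rasterization algorithm, because circle() also supports anti-aliased lines and sub-pixel shifts.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Minimal grayscale image stored row-major (hypothetical type, not cv::Mat).
struct GrayImage {
    int cols, rows;
    std::vector<std::uint8_t> data;
    GrayImage(int c, int r) : cols(c), rows(r), data(static_cast<std::size_t>(c) * r, 0) {}
    std::uint8_t& at(int x, int y) { return data[static_cast<std::size_t>(y) * cols + x]; }
};

// Sketch of a filled circle (the thickness < 0 case): set every pixel whose
// distance from (cx, cy) is at most radius. Pixels outside the image are skipped.
void fillCircle(GrayImage& img, int cx, int cy, int radius, std::uint8_t value) {
    for (int y = cy - radius; y <= cy + radius; ++y) {
        for (int x = cx - radius; x <= cx + radius; ++x) {
            if (x < 0 || y < 0 || x >= img.cols || y >= img.rows) continue;
            int dx = x - cx, dy = y - cy;
            if (dx * dx + dy * dy <= radius * radius) img.at(x, y) = value;
        }
    }
}
```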

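⑤ The mouse callback function setMouseCallback()

void setMouseCallback(const String& winname, MouseCallback onMouse, void* userdata = 0)

//winname: name of the window

//onMouse: the mouse handler (callback), a function pointer that is called every time a mouse event occurs in the specified window. Its prototype should be void on_Mouse(int event, int x, int y, int flags, void* param);

//userdata: an optional parameter passed to the callback

void on_Mouse(int event, int x, int y, int flags, void* param);

//event: one of the CV_EVENT_* constants (cv::EVENT_* in the OpenCV 4 C++ API)

//x and y: mouse pointer coordinates in the image coordinate system (not the window coordinate system)

//flags: a combination of CV_EVENT_FLAG_* values; param: the user-defined parameter passed in the setMouseCallback call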

//values of event:

#define CV_EVENT_MOUSEMOVE 0 //mouse move

#define CV_EVENT_LBUTTONDOWN 1 //left button pressed

#define CV_EVENT_RBUTTONDOWN 2 //right button pressed

#define CV_EVENT_MBUTTONDOWN 3 //middle button pressed

#define CV_EVENT_LBUTTONUP 4 //left button released

#define CV_EVENT_RBUTTONUP 5 //right button released

#define CV_EVENT_MBUTTONUP 6 //middle button released

#define CV_EVENT_LBUTTONDBLCLK 7 //left button double-click

#define CV_EVENT_RBUTTONDBLCLK 8 //right button double-click

#define CV_EVENT_MBUTTONDBLCLK 9 //middle button double-click
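The callback mechanism above comes down to storing a function pointer together with a void* and calling it back later. The sketch below mimics that pattern in plain C++ so it can run without a window. `FakeWindow`, `recordClicks`, and `Point` are hypothetical names. Only the callback signature and the two event codes are taken from the text above. The callback casts the void* back to its real type, just as the On_mouse example that follows does with `reinterpret_cast<Mat*>(param)`.

```cpp
#include <cassert>
#include <vector>

// Same shape as OpenCV's MouseCallback signature.
typedef void (*MouseCallback)(int event, int x, int y, int flags, void* userdata);

// Event codes copied from the list above.
const int EVENT_MOUSEMOVE = 0;
const int EVENT_LBUTTONDOWN = 1;

// Hypothetical stand-in for a HighGUI window: it only remembers the callback
// and the userdata pointer, then calls them when an event is "delivered".
struct FakeWindow {
    MouseCallback onMouse = nullptr;
    void* userdata = nullptr;
    void setMouseCallback(MouseCallback cb, void* data) { onMouse = cb; userdata = data; }
    void deliver(int event, int x, int y, int flags) {
        if (onMouse) onMouse(event, x, y, flags, userdata);
    }
};

struct Point { int x, y; };

// Callback that records left-click positions into the vector passed as userdata.
void recordClicks(int event, int x, int y, int /*flags*/, void* userdata) {
    std::vector<Point>* clicks = static_cast<std::vector<Point>*>(userdata);
    if (event == EVENT_LBUTTONDOWN) clicks->push_back(Point{x, y});
}
```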

//Example code

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace std;

using namespace cv;

#define WINDOW "Original"

Mat g_srcImage,g_dstImage;

void On_mouse(int event, int x, int y, int flags, void* param);

int main()

{

g_srcImage = imread("1.jpg", 1);

imshow(WINDOW, g_srcImage);

setMouseCallback(WINDOW, On_mouse, reinterpret_cast<void*> (&g_srcImage));

waitKey(0);

return 0;

}

void On_mouse(int event, int x, int y, int flags, void*param)

{

Mat *im = reinterpret_cast<Mat*>(param);

if (event == EVENT_LBUTTONDOWN)

{

circle(*im,Point(x,y),3,Scalar(0,0,255),-1);

}

imshow(WINDOW,*im);

}

⑥仿射变换和透视变换

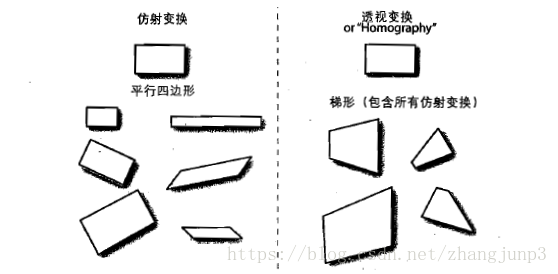

对于平面区域,有两种方式的几何转换:一种是基于2×3矩阵进行的变换,叫仿射变换;另一种是基于3×3矩阵进行的变换,叫透视变换或者单应性映射。关于仿射变换和透射变换的矩阵变换,这篇博文不做重点讨论,因为图像本质就是矩阵,对矩阵的变换就是对图像像素的操作,很简单的数学知识。

仿射变换可以形象的表示成以下形式。一个平面内的任意平行四边形ABCD可以被仿射变换映射为另一个平行四边形A’B’C’D’。通俗的解释就是,可以将仿射变换想象成一幅图像画到一个胶版上,在胶版的角上推或拉,使其变形而得到不同类型的平行四边形。相比较仿射变换,透射变换更具有灵活性,一个透射变换可以将矩形转变成梯形。如下图:

仿射变换:

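Point2f srcTriangle[3];

Point2f dstTriangle[3];//declare two Point2f arrays (the corresponding points)

//fill in the two sets of three points

Mat warpMat(2, 3, CV_32FC1);//matrix declaration

warpMat = getAffineTransform(srcTriangle, dstTriangle);//compute the affine matrix from the three point pairs

warpMat = getRotationMatrix2D(center, angle, scale);

//alternative: center is the rotation center, angle the rotation angle in degrees (positive means counter-clockwise), and scale an isotropic scale factor; this returns the rotation matrix

warpAffine(src, dst, warpMat, src.size());//apply the transform; the last argument is the output image size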
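The matrix returned by getRotationMatrix2D has a closed form. Let α = scale·cos(angle) and β = scale·sin(angle), with angle in degrees and positive meaning counter-clockwise. The first row is [α, β, (1−α)·cx − β·cy] and the second row is [−β, α, β·cx + (1−α)·cy].

The plain C++ sketch below builds that matrix and applies a 2×3 matrix to a single point. warpAffine performs this coordinate mapping for the image as a whole, although internally it uses the inverse mapping and interpolates pixel values. `rotationMatrix2D` and `applyAffine` are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

typedef std::array<std::array<double, 3>, 2> Affine;  // 2x3 matrix, like warpMat

// Builds the 2x3 rotation matrix with the formula above:
// alpha = scale*cos(angle), beta = scale*sin(angle), angle in degrees.
Affine rotationMatrix2D(double cx, double cy, double angleDeg, double scale) {
    const double kPi = 3.14159265358979323846;
    double a = angleDeg * kPi / 180.0;
    double alpha = scale * std::cos(a), beta = scale * std::sin(a);
    return {{ {{alpha, beta, (1 - alpha) * cx - beta * cy}},
              {{-beta, alpha, beta * cx + (1 - alpha) * cy}} }};
}

// Maps one point with a 2x3 affine matrix: [x', y'] = M * [x, y, 1]^T.
std::array<double, 2> applyAffine(const Affine& m, double x, double y) {
    return {{m[0][0] * x + m[0][1] * y + m[0][2],
             m[1][0] * x + m[1][1] * y + m[1][2]}};
}
```

Rotating (60, 50) by 90 degrees about (50, 50) gives (50, 40). Because the image y axis points down, that point moves from the right of the center to above it, which is a counter-clockwise rotation on screen.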

//Example code

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/imgproc/imgproc.hpp"

#include <iostream>

#include <stdio.h>

using namespace cv;

using namespace std;

/// Global variables

const char* source_window = "Source image";

const char* warp_window = "Warp";

const char* warp_rotate_window = "Warp + Rotate";

/** @function main */

int main( int argc, char** argv )

{

Point2f srcTri[3];

Point2f dstTri[3];

Mat rot_mat( 2, 3, CV_32FC1 );

Mat warp_mat( 2, 3, CV_32FC1 );

Mat src, warp_dst, warp_rotate_dst;

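/// Load the source image

src = imread( "1.jpg", 1 );

/// Give the destination image the same size and type as the source image

warp_dst = Mat::zeros( src.rows, src.cols, src.type() );

/// Set three point pairs on the source and destination images to compute the affine transform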

srcTri[0] = Point2f( 0,0 );

srcTri[1] = Point2f( src.cols - 1, 0 );

srcTri[2] = Point2f( 0, src.rows - 1 );

dstTri[0] = Point2f( src.cols*0.0, src.rows*0.33 );

dstTri[1] = Point2f( src.cols*0.85, src.rows*0.25 );

dstTri[2] = Point2f( src.cols*0.15, src.rows*0.7 );

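/// Compute the affine transform

warp_mat = getAffineTransform( srcTri, dstTri );

/// Apply the affine transform computed above to the source image

warpAffine( src, warp_dst, warp_mat, warp_dst.size() );

/** Rotate the warped image */

/// Compute a rotation matrix that rotates 50 degrees clockwise about the image center with a scale factor of 0.6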

Point center = Point( warp_dst.cols/2, warp_dst.rows/2 );

double angle = -50.0;

double scale = 0.6;

/// Compute the rotation matrix from the parameters above

rot_mat = getRotationMatrix2D( center, angle, scale );

/// Rotate the warped image

warpAffine( warp_dst, warp_rotate_dst, rot_mat, warp_dst.size() );

/// Display the results

namedWindow( source_window, WINDOW_AUTOSIZE );

imshow( source_window, src );

namedWindow( warp_window, WINDOW_AUTOSIZE );

imshow( warp_window, warp_dst );

namedWindow( warp_rotate_window, WINDOW_AUTOSIZE );

imshow( warp_rotate_window, warp_rotate_dst );

/// 等待用户按任意按键退出程序

waitKey(0);

return 0;

}

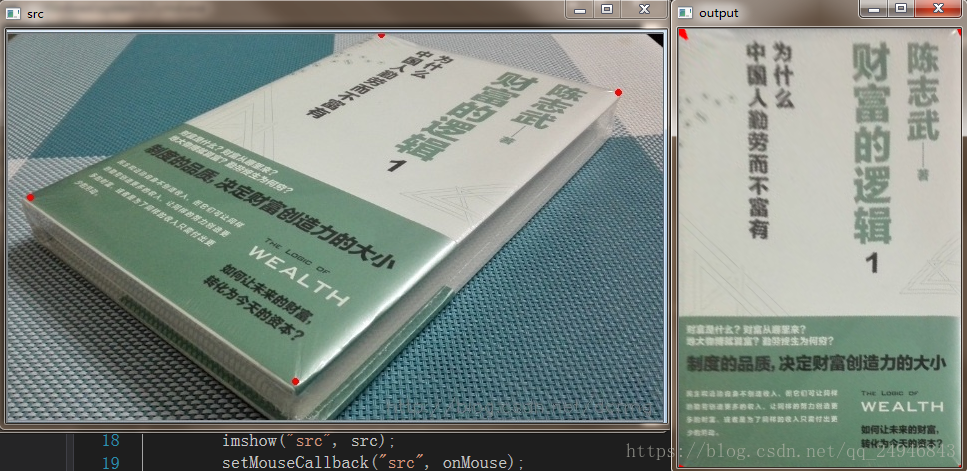

Perspective transform:

A perspective transformation projects an image onto a new viewing plane; it is also called a projective mapping.

//Compute the perspective (homography) matrix from two sets of corresponding points in the input and output images:

findHomography( InputArray srcPoints, InputArray dstPoints,OutputArray mask, int method = 0, double ransacReprojThreshold = 3 );

//Apply the perspective matrix to an image (inputs: source image src, output size, transformation matrix; output: result image dst)

warpPerspective( InputArray src, OutputArray dst,

InputArray M, Size dsize,

int flags = INTER_LINEAR,

int borderMode = BORDER_CONSTANT,

const Scalar& borderValue = Scalar());

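//Perspective transform of points (apply the perspective matrix to points):

perspectiveTransform(InputArray src, OutputArray dst, InputArray m);

//given the input points and the transformation matrix m, returns each point's position in the result image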
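For a single point, perspectiveTransform multiplies the homogeneous coordinates by the 3×3 matrix and then divides by the third component. The sketch below shows this in plain C++. `applyHomography` is a hypothetical name. Throwing an exception when the third component is near zero is a choice made here; it is not OpenCV's behavior.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <stdexcept>

typedef std::array<std::array<double, 3>, 3> Mat3;  // 3x3 perspective matrix H

// Maps one point: [X, Y, W]^T = H * [x, y, 1]^T, then (x', y') = (X / W, Y / W).
std::array<double, 2> applyHomography(const Mat3& H, double x, double y) {
    double X = H[0][0] * x + H[0][1] * y + H[0][2];
    double Y = H[1][0] * x + H[1][1] * y + H[1][2];
    double W = H[2][0] * x + H[2][1] * y + H[2][2];
    if (std::fabs(W) < 1e-12) throw std::domain_error("point maps to infinity");
    return {{X / W, Y / W}};
}
```

The final division means that multiplying H by any non-zero constant gives the same mapping. That is why a homography has only 8 degrees of freedom and can be computed from 4 point pairs, as in the example that follows.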

#include<opencv2/opencv.hpp>

#include<iostream>

using namespace cv;

using namespace std;

vector<Point2f> srcTri(4);

vector<Point2f> dstTri(4);

int clickTimes = 0; //number of clicks on the image

Mat src,dst;

Mat imageWarp;

void onMouse(int event, int x, int y, int flags, void *utsc);

int main(int argc, char *argv[])

{

src = imread("2.jpg");

namedWindow("src", WINDOW_AUTOSIZE);

imshow("src", src);

setMouseCallback("src", onMouse);

waitKey();

return 0;

}

void onMouse(int event, int x, int y, int flags, void *utsc)

{

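if (event == EVENT_LBUTTONUP && clickTimes < 4) //respond to a left-button release, at most four times (srcTri holds four points)

{

circle(src, Point(x, y), 2, Scalar(0, 0, 255), 2); //mark the selected point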

imshow("wait ", src);

srcTri[clickTimes].x = x;

srcTri[clickTimes].y = y;

clickTimes++;

}

if (clickTimes == 4)

{

dstTri[0].x = 0;

dstTri[0].y = 0;

dstTri[1].x = 282;

dstTri[1].y = 0;

dstTri[2].x = 282 ;

dstTri[2].y = 438;

dstTri[3].x = 0;

dstTri[3].y = 438;

Mat H = findHomography(srcTri, dstTri, RANSAC);//compute the perspective matrix

warpPerspective(src, dst, H, Size(282, 438));//apply the perspective transform to the image

imshow("output", dst);

}

}

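//Selects a region of the source image (click its four corners in the order top-left, top-right, bottom-right, bottom-left to match dstTri) and shows it in an image of fixed size

The result is shown in the figure: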

⑦ Rect()

The rectangle type, which can be used to select a specific region of an image

Rect(int x,int y,int width,int height);

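//x: column coordinate of the rectangle's top-left corner

//y: row coordinate of the rectangle's top-left corner

//width: width of the rectangle

//height: height of the rectangle

Some operations on the Rect type: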

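| Operation | Example |
|---|---|
| Default constructor | cv::Rect r |
| Copy constructor | cv::Rect r2(r1) |
| Constructor with values | cv::Rect(x,y,w,h) |
| Constructor from origin point and Size | cv::Rect(p,sz) |
| Constructor from two corner points | cv::Rect(p1,p2) |
| Member access | r.x, r.y, r.width, r.height |
| Area | r.area() |
| Top-left corner | r.tl() |
| Bottom-right corner | r.br() |
| Test whether a point is inside the rectangle | r.contains(p) |
| Intersection of two rectangles | cv::Rect r3=r1&r2 |
| Smallest rectangle containing both r1 and r2 | cv::Rect r3=r1\|r2 |
| Translate the rectangle by a Point x | cv::Rect r3=r+x |
| Enlarge the rectangle by a Size s | cv::Rect rs=r+s |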
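Several of the table entries (area, contains, & and |) can be reproduced with a small stand-in struct. This is a plain C++ sketch with a hypothetical `Rect` type and the hypothetical function names `intersect` and `boundingUnion`. It follows cv::Rect's convention that the right and bottom edges are exclusive.

```cpp
#include <algorithm>
#include <cassert>

// Plain-C++ stand-in for cv::Rect (hypothetical, for illustration only).
struct Rect {
    int x, y, width, height;
    Rect(int x_ = 0, int y_ = 0, int w_ = 0, int h_ = 0) : x(x_), y(y_), width(w_), height(h_) {}
    int area() const { return width * height; }
    bool empty() const { return width <= 0 || height <= 0; }
    // Right and bottom edges are exclusive, as with cv::Rect::contains.
    bool contains(int px, int py) const {
        return x <= px && px < x + width && y <= py && py < y + height;
    }
};

// Intersection (like r1 & r2); returns an empty Rect when they do not overlap.
Rect intersect(const Rect& a, const Rect& b) {
    int x1 = std::max(a.x, b.x), y1 = std::max(a.y, b.y);
    int x2 = std::min(a.x + a.width, b.x + b.width);
    int y2 = std::min(a.y + a.height, b.y + b.height);
    if (x2 <= x1 || y2 <= y1) return Rect();
    return Rect(x1, y1, x2 - x1, y2 - y1);
}

// Smallest rectangle containing both (like r1 | r2).
Rect boundingUnion(const Rect& a, const Rect& b) {
    if (a.empty()) return b;
    if (b.empty()) return a;
    int x1 = std::min(a.x, b.x), y1 = std::min(a.y, b.y);
    int x2 = std::max(a.x + a.width, b.x + b.width);
    int y2 = std::max(a.y + a.height, b.y + b.height);
    return Rect(x1, y1, x2 - x1, y2 - y1);
}
```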

4) Operations related to image fusion

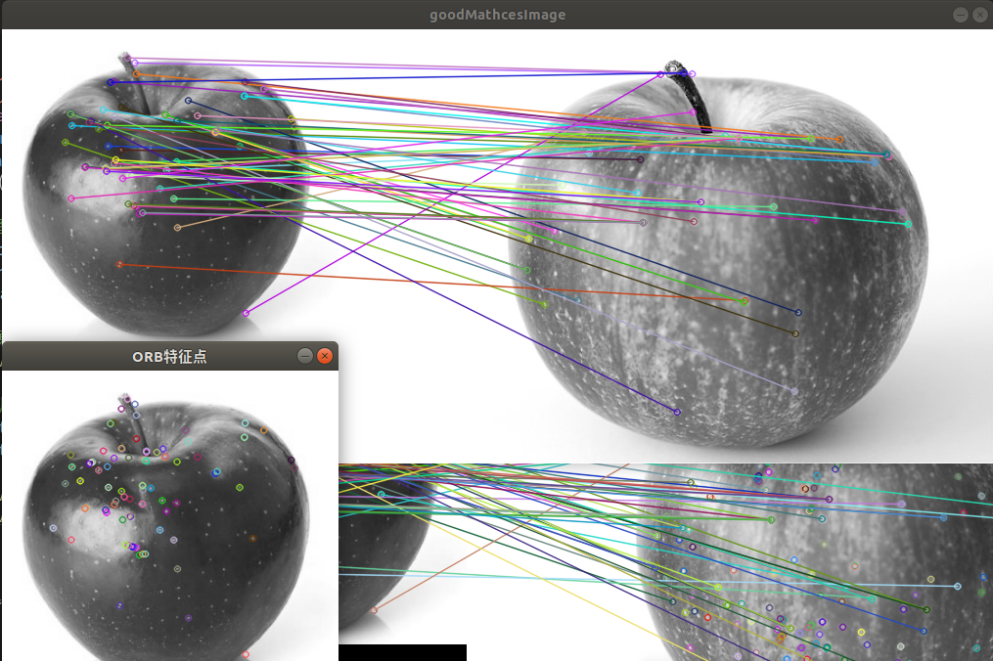

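① Feature detection and feature matching

Every image contains some distinctive pixels. These can be regarded as the features of the image and are called feature points. Image feature matching, a very important task in computer vision, is built on feature points, so defining and finding the feature points of an image is essential.

In computer vision, the concept of interest points (also called keypoints or feature points) is widely used, for example in object recognition, image registration, visual tracking, and 3D reconstruction. The idea is to select certain feature points and analyze the image locally around them, instead of looking at the whole image. This approach works well as long as the image contains enough detectable interest points, and these points are distinctive, stable, and can be localized accurately.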

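Step 1: convert to grayscale

cvtColor(srcImage,srcGrayImage,COLOR_BGR2GRAY);//imread loads color images in BGR order

Step 2: declare vectors of KeyPoint

vector<KeyPoint> keypoints_1, keypoints_2;

Step 3: create a feature detector (pick one of the following)

Ptr<FastFeatureDetector> detector=FastFeatureDetector::create();//FAST

Ptr<FeatureDetector> detector = ORB::create();//or ORB

Ptr<AKAZE> detector = AKAZE::create();//or AKAZE

Step 4: detect the keypoints in the image

detector->detect(src,keypoints_1);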

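Step 5: draw the keypoints; the flag can be DrawMatchesFlags::DRAW_RICH_KEYPOINTS or

DrawMatchesFlags::DEFAULT

Scalar(b, g, r) sets the color; Scalar::all(-1) draws each keypoint in a random color

drawKeypoints(src,keypoints_1,dst,Scalar(0,0,255),DrawMatchesFlags::DEFAULT);

Step 6: compute the descriptors

Mat descriptors_1,descriptors_2;//descriptors

Ptr<DescriptorExtractor> descriptor = ORB::create();

descriptor->compute ( src,keypoints_1, descriptors_1 );

Step 7: get the keypoints and descriptors in a single call (AKAZE)

detector->detectAndCompute(src, Mat(), keypoints_1, descriptors_1);

Step 8: match the descriptors

vector<DMatch> matches;//the matches

BFMatcher matcher(NORM_HAMMING);//brute-force matcher: NORM_HAMMING for binary descriptors (ORB, AKAZE), NORM_L2 for float descriptors (e.g. SIFT, SURF)

//or, equivalently for binary descriptors:

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create ( "BruteForce-Hamming" );

matcher->match ( descriptors_1, descriptors_2, matches );

Step 9: display the keypoints

drawKeypoints( src, keypoints_1, dst,Scalar::all(-1),DrawMatchesFlags::DEFAULT );

//the flag can also be DrawMatchesFlags::DRAW_RICH_KEYPOINTS (each keypoint is drawn as a circle of its size, with its orientation)

//DrawMatchesFlags::DEFAULT draws each keypoint as a small circle

Step 10: display the keypoint matches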

drawMatches ( img_1, keypoints_1, img_2, keypoints_2, matches, img_match );
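Matching binary descriptors such as ORB or AKAZE with NORM_HAMMING comes down to counting differing bits and picking the closest candidate. The plain C++ sketch below shows that brute-force idea. `Descriptor`, `SimpleMatch`, `hammingDistance`, and `bruteForceMatch` are hypothetical names. The real BFMatcher adds options that are omitted here, such as cross-checking, k-nearest matches, and masks.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

typedef std::vector<std::uint8_t> Descriptor;  // one binary descriptor, e.g. 32 bytes for ORB

// Hamming distance: the number of differing bits (what NORM_HAMMING measures).
int hammingDistance(const Descriptor& a, const Descriptor& b) {
    assert(a.size() == b.size());
    int dist = 0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        std::uint8_t v = a[i] ^ b[i];
        while (v) { dist += v & 1; v >>= 1; }
    }
    return dist;
}

struct SimpleMatch { int queryIdx; int trainIdx; int distance; };  // like DMatch

// Brute-force matching: for every query descriptor, pick the train descriptor
// with the smallest Hamming distance (the idea behind BFMatcher::match).
std::vector<SimpleMatch> bruteForceMatch(const std::vector<Descriptor>& query,
                                         const std::vector<Descriptor>& train) {
    std::vector<SimpleMatch> matches;
    for (int q = 0; q < static_cast<int>(query.size()); ++q) {
        int best = -1, bestDist = std::numeric_limits<int>::max();
        for (int t = 0; t < static_cast<int>(train.size()); ++t) {
            int d = hammingDistance(query[q], train[t]);
            if (d < bestDist) { bestDist = d; best = t; }
        }
        if (best >= 0) matches.push_back(SimpleMatch{q, best, bestDist});
    }
    return matches;
}
```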

//Example code 1:

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/features2d/features2d.hpp>

#include <opencv2/highgui/highgui.hpp>

using namespace std;

using namespace cv;

int main ( int argc, char** argv )

{

//-- Read the images as grayscale

Mat img_1 = imread ( "pingguo1.png", IMREAD_GRAYSCALE );

Mat img_2 = imread ( "pingguo2.jpg", IMREAD_GRAYSCALE );

//-- Initialization

std::vector<KeyPoint> keypoints_1, keypoints_2;

Mat descriptors_1, descriptors_2;

Ptr<FeatureDetector> detector = ORB::create();

Ptr<DescriptorExtractor> descriptor = ORB::create();

// Ptr<FeatureDetector> detector = FeatureDetector::create(detector_name);

// Ptr<DescriptorExtractor> descriptor=DescriptorExtractor::create(descriptor_name);

Ptr<DescriptorMatcher> matcher = DescriptorMatcher::create ( "BruteForce-Hamming" );

//-- Step 1: detect the Oriented FAST corner positions

detector->detect ( img_1,keypoints_1 );

detector->detect ( img_2,keypoints_2 );

//-- Step 2: compute BRIEF descriptors at the corner positions

descriptor->compute ( img_1, keypoints_1, descriptors_1 );

descriptor->compute ( img_2, keypoints_2, descriptors_2 );

Mat outimg1;

drawKeypoints( img_1, keypoints_1, outimg1, Scalar::all(-1), DrawMatchesFlags::DEFAULT );

imshow("ORB keypoints",outimg1);

//-- Step 3: match the BRIEF descriptors of the two images using Hamming distance

vector<DMatch> matches;

matcher->match ( descriptors_1, descriptors_2, matches );

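//-- Step 4: filter the matched pairs

double min_dist=10000, max_dist=0;

//find the minimum and maximum distance over all matches, i.e. the distances of the most similar and the least similar pairs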

for ( int i = 0; i < descriptors_1.rows; i++ )

{

double dist = matches[i].distance;

if ( dist < min_dist ) min_dist = dist;

if ( dist > max_dist ) max_dist = dist;

}

// An alternative way to write the same thing (just for fun)

min_dist = min_element( matches.begin(), matches.end(), [](const DMatch& m1, const DMatch& m2) {return m1.distance<m2.distance;} )->distance;

max_dist = max_element( matches.begin(), matches.end(), [](const DMatch& m1, const DMatch& m2) {return m1.distance<m2.distance;} )->distance;

printf ( "-- Max dist : %f \n", max_dist );

printf ( "-- Min dist : %f \n", min_dist );

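//A match is considered wrong when its descriptor distance is greater than twice the minimum distance. Because the minimum distance can sometimes be very small, an empirical value of 30 is used as a lower bound.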

std::vector< DMatch > good_matches;

for ( int i = 0; i < descriptors_1.rows; i++ )

{

if ( matches[i].distance <= max ( 2*min_dist, 30.0 ) )

{

good_matches.push_back ( matches[i] );

}

}

//-- Step 5: draw the matching results

Mat img_match;

Mat img_goodmatch;

drawMatches ( img_1, keypoints_1, img_2, keypoints_2, matches, img_match );

drawMatches ( img_1, keypoints_1, img_2, keypoints_2, good_matches, img_goodmatch );

imshow ( "All matches", img_match );

imshow ( "Filtered matches", img_goodmatch );

waitKey(0);

return 0;

}
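The filtering rule in Step 4 above keeps a match if its distance is at most max(2·min_dist, 30). It can be isolated into a small function. This is a plain C++ sketch with the hypothetical name `filterByMinDistance`. Unlike the example, it returns early when there are no matches, because dereferencing min_element on an empty vector is undefined. The factor and floor are the empirical values from the example, not general constants.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Returns the indices of matches whose distance is <= max(factor * minDist, floor);
// the example above uses factor = 2 and floor = 30.
std::vector<int> filterByMinDistance(const std::vector<double>& distances,
                                     double factor, double floor) {
    std::vector<int> kept;
    if (distances.empty()) return kept;  // nothing to filter
    double minDist = *std::min_element(distances.begin(), distances.end());
    double threshold = std::max(factor * minDist, floor);
    for (int i = 0; i < static_cast<int>(distances.size()); ++i)
        if (distances[i] <= threshold) kept.push_back(i);
    return kept;
}
```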

//AKAZE feature extraction and matching

#include <opencv2/opencv.hpp>

#include <iostream>

#include <math.h>

using namespace cv;

using namespace std;

int main()

{

Mat objImage = imread("pingguo1.png", 0);

Mat sceneImage = imread("pingguo2.jpg", 0);

// AKAZE detection

Ptr<AKAZE> detector = AKAZE::create();

vector<KeyPoint> obj_keypoints, scene_keypoints;

Mat obj_descriptor, scene_descriptor;

double t1 = getTickCount();

// get the keypoints and descriptors

detector->detectAndCompute(objImage, Mat(), obj_keypoints, obj_descriptor);

detector->detectAndCompute(sceneImage, Mat(), scene_keypoints, scene_descriptor);

double t2 = getTickCount();

// elapsed time in milliseconds

double d_kazeTime = 1000 * (t2 - t1) / getTickFrequency();

printf("Use time is: %f\n", d_kazeTime);

// matching

BFMatcher matcher(NORM_HAMMING);// AKAZE's default descriptors are binary, so compare them with Hamming distance

vector<DMatch> matches;

matcher.match(obj_descriptor, scene_descriptor, matches);

// draw matches -key points

Mat akazeMatchesImage;

drawMatches(objImage, obj_keypoints, sceneImage, scene_keypoints, matches, akazeMatchesImage);

imshow("akazeMatchesImage", akazeMatchesImage);

// good matches

vector<DMatch> goodMatches;

double minDist = 10000, maxDist = 0;

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist < minDist)

minDist = dist;

if (dist > maxDist)

maxDist = dist;

}

for (size_t i = 0; i < matches.size(); i++)

{

double dist = matches[i].distance;

if (dist < max(3 * minDist, 0.02))

goodMatches.push_back(matches[i]);

}

Mat goodMathcesImage;

drawMatches(objImage, obj_keypoints, sceneImage, scene_keypoints, goodMatches, goodMathcesImage,\

Scalar::all(-1), Scalar::all(-1), vector<char>(), DrawMatchesFlags::NOT_DRAW_SINGLE_POINTS);

imshow("goodMathcesImage", goodMathcesImage);

waitKey(0);

return 0;

}

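Example result:

② Image stitching

Image stitching is widely used in practice, for example in drone aerial photography and remote sensing. Stitching is a basic step before further image understanding, and its quality directly affects the work that follows, so a good stitching algorithm is very important.

Image stitching takes five steps:

1) Extract feature points from each image

2) Match the feature points

3) Register the images

4) Copy each image into its position in the other image

5) Handle the overlapping border region specially (blending)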
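As a minimal illustration of step 5, the sketch below blends one row of two overlapping images with a linear ramp. Inside the overlap, the weight of the right image grows from 0 to 1. This is plain C++ on grayscale values with a hypothetical function `blendRow`. It is a simple feather-style blend, not the multi-band blender used by OpenCV's stitching code.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Linear blend of one row across an overlap. Both rows are already placed in
// panorama coordinates: left covers columns [0, left.size()), right covers
// [offset, offset + right.size()). In the overlap, right's weight ramps from 0 to 1.
std::vector<double> blendRow(const std::vector<double>& left,
                             const std::vector<double>& right, std::size_t offset) {
    assert(offset <= left.size());
    std::size_t width = std::max(left.size(), offset + right.size());
    std::size_t overlapEnd = std::min(left.size(), offset + right.size());
    double overlapLen = static_cast<double>(overlapEnd - offset);
    std::vector<double> out(width, 0.0);
    for (std::size_t c = 0; c < width; ++c) {
        bool inLeft = c < left.size();
        bool inRight = c >= offset && c < offset + right.size();
        if (inLeft && inRight) {
            double w = static_cast<double>(c - offset + 1) / (overlapLen + 1.0);  // weight of right
            out[c] = (1.0 - w) * left[c] + w * right[c - offset];
        } else if (inLeft) {
            out[c] = left[c];
        } else if (inRight) {
            out[c] = right[c - offset];
        }
    }
    return out;
}
```

A hard cut at the seam would leave a visible step wherever the two images differ in brightness. The ramp spreads that difference across the overlap, which is the same motivation behind the feather and multi-band blenders.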

Below is an example using OpenCV's built-in Stitcher class:

#include <iostream>

#include <opencv2/core/core.hpp>

#include <opencv2/highgui/highgui.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/stitching.hpp>

using namespace std;

using namespace cv;

bool try_use_gpu = false;

vector<Mat> imgs;

string result_name = "dst1.jpg";

int main(int argc, char * argv[])

{

Mat img1 = imread(".jpg");

Mat img2 = imread(".jpg");

if (img1.empty() || img2.empty())

{

cout << "Can't read image" << endl;

return -1;

}

imshow("p1", img1);

imshow("p2", img2);

imgs.push_back(img1);

imgs.push_back(img2);

Ptr<Stitcher> stitcher = Stitcher::create(Stitcher::PANORAMA);// OpenCV 4 replaces createDefault() with create()

// stitch the images with stitch()

Mat pano;

Stitcher::Status status = stitcher->stitch(imgs, pano);

if (status != Stitcher::OK)

{

cout << "Can't stitch images, error code = " << int(status) << endl;

return -1;

}

imwrite(result_name, pano);

Mat pano2 = pano.clone();

// display the result image

imshow("pano", pano);

if (waitKey() == 27)

return 0;

}

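OpenCV's built-in stitching produces fairly good results, but at the cost of being relatively slow.

So, to learn how image fusion works, I studied the source code of the stitching pipeline and became more familiar with each of its steps.

Below is the detailed stitching code (adapted from OpenCV's stitching_detailed.cpp sample), with notes on some of its variables and functions: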

#include <iostream>

#include <fstream>

#include <string>

#include "opencv2/opencv_modules.hpp"

#include <opencv2/core/utility.hpp>

#include "opencv2/imgcodecs.hpp"

#include "opencv2/highgui.hpp"

#include "opencv2/stitching/detail/autocalib.hpp"

#include "opencv2/stitching/detail/blenders.hpp"

#include "opencv2/stitching/detail/timelapsers.hpp"

#include "opencv2/stitching/detail/camera.hpp"

#include "opencv2/stitching/detail/exposure_compensate.hpp"

#include "opencv2/stitching/detail/matchers.hpp"

#include "opencv2/stitching/detail/motion_estimators.hpp"

#include "opencv2/stitching/detail/seam_finders.hpp"

#include "opencv2/stitching/detail/warpers.hpp"

#include "opencv2/stitching/warpers.hpp"

using namespace std;

using namespace cv;

using namespace cv::detail;

// Default command line args

vector<string> img_names;

bool preview = false;

bool try_cuda = false;

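double work_megapix = 0.6;//resolution (megapixels) for the image registration (feature matching) step

double seam_megapix = 0.1;//resolution (megapixels) for the seam estimation step

double compose_megapix = -1;//resolution for the compositing step; -1 means use the original resolution

float conf_thresh = 1.f;//confidence threshold for deciding that two images belong to the same panorama

string matcher_type = "homography";

string estimator_type = "homography";//method for estimating the camera parameters

string ba_cost_func = "ray";//bundle adjustment cost function (no|reproj|ray|affine)

string ba_refine_mask = "xxxxx";

bool do_wave_correct = true;//wave correction (no|horiz|vert); horizontal by default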

WaveCorrectKind wave_correct = detail::WAVE_CORRECT_HORIZ;

bool save_graph = false;

std::string save_graph_to;

string warp_type = "spherical";

int expos_comp_type = ExposureCompensator::GAIN_BLOCKS;

int expos_comp_nr_feeds = 1;

int expos_comp_nr_filtering = 2;

int expos_comp_block_size = 32;

string seam_find_type = "gc_color";

int blend_type = Blender::MULTI_BAND;

int timelapse_type = Timelapser::AS_IS;

float blend_strength = 5;// blending strength in [0,100]; it sets the blend width, and hence the number of bands used by the multi-band blender

string result_name = "result.jpg";

bool timelapse = false;

int range_width = -1;

int main()

{

string a=".jpg";

int num_images =2;

vector<Mat> images(num_images);

double work_scale = 1, seam_scale = 1, compose_scale = 1;

bool is_work_scale_set = false, is_seam_scale_set = false, is_compose_scale_set = false;

Ptr<Feature2D> finder=ORB::create();//ORB detector used to find the features of each image

Mat full_img, img,full_img1;

vector<ImageFeatures>features(num_images);

vector<Size> full_img_sizes(num_images);//stores the size of each image

double seam_work_aspect = 1;

for(int i=0;i<num_images;i++)

{

img_names.push_back(to_string(i)+a);

}

for (int i = 0; i < num_images; ++i)

{

full_img = imread(samples::findFile(img_names[i]));

//resize(full_img1,full_img,Size(full_img1.cols/2,full_img1.rows/2));

full_img_sizes[i] = full_img.size();

if (work_megapix < 0)

{

img = full_img;

work_scale = 1;

is_work_scale_set = true;

}

else

{

if (!is_work_scale_set)

{

work_scale = min(1.0, sqrt(work_megapix * 1e6 / full_img.size().area()));//scale so the work image has at most about work_megapix megapixels

is_work_scale_set = true;

}

resize(full_img, img, Size(), work_scale, work_scale, INTER_LINEAR_EXACT);

}

if (!is_seam_scale_set)

{

seam_scale = min(1.0, sqrt(seam_megapix * 1e6 / full_img.size().area()));//scale for seam estimation

seam_work_aspect = seam_scale / work_scale;

is_seam_scale_set = true;

}

computeImageFeatures(finder, img, features[i]);

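//cv::detail::computeImageFeatures(const Ptr<Feature2D>& featuresFinder, InputArray image, ImageFeatures& features, InputArray mask=noArray()): takes the feature detector and the image, and fills in the image size, keypoints, and descriptors

features[i].img_idx = i;//index of the image these features belong to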

cout<<"Features in image #" << i+1 << ": " << features[i].keypoints.size()<<endl;

resize(full_img, img, Size(), seam_scale, seam_scale, INTER_LINEAR_EXACT);

images[i] = img.clone();

}

full_img.release();

img.release();

//** Feature matching

vector<MatchesInfo> pairwise_matches;

Ptr<FeaturesMatcher> matcher;

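matcher=makePtr<BestOf2NearestMatcher>(try_cuda, 0.3f);//matches every image pair; the first parameter of BestOf2NearestRangeMatcher is range_width, so passing false (0) there leaves no pairs to match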

(*matcher)(features, pairwise_matches);

matcher->collectGarbage();

if (save_graph)

{

cout<<"Saving matches graph...";

ofstream f(save_graph_to.c_str());

f << matchesGraphAsString(img_names, pairwise_matches, conf_thresh);

}

vector<int> indices = leaveBiggestComponent(features, pairwise_matches, conf_thresh);

vector<Mat> img_subset;

vector<String> img_names_subset;

vector<Size> full_img_sizes_subset;

for (size_t i = 0; i < indices.size(); ++i)

{

img_names_subset.push_back(img_names[indices[i]]);

img_subset.push_back(images[indices[i]]);

full_img_sizes_subset.push_back(full_img_sizes[indices[i]]);

}

images = img_subset;

img_names = img_names_subset;

full_img_sizes = full_img_sizes_subset;

num_images = static_cast<int>(images.size());

cout<<num_images<<endl;

if (num_images < 2)

{

cout<<"Need more images"<<endl;

return -1;

}

Ptr<Estimator> estimator= makePtr<HomographyBasedEstimator>();

//** Estimate the camera parameters (intrinsics K and rotation matrices R)

vector<CameraParams> cameras;

if(!(*estimator)(features, pairwise_matches, cameras))

{

cout<<"Homography estimation failed"<<endl;

return -1;

}

for (size_t i = 0; i < cameras.size(); ++i)

{

Mat R;

cameras[i].R.convertTo(R, CV_32F);

cameras[i].R = R;

cout<<"Initial camera intrinsics #" << indices[i]+1 << ":\nK:\n" << cameras[i].K() << "\nR:\n" << cameras[i].R;

}

Ptr<detail::BundleAdjusterBase> adjuster=makePtr<detail::BundleAdjusterRay>();

adjuster->setConfThresh(conf_thresh);

Mat_<uchar> refine_mask = Mat::zeros(3, 3, CV_8U);

if (ba_refine_mask[0] == 'x') refine_mask(0,0) = 1;

if (ba_refine_mask[1] == 'x') refine_mask(0,1) = 1;

if (ba_refine_mask[2] == 'x') refine_mask(0,2) = 1;

if (ba_refine_mask[3] == 'x') refine_mask(1,1) = 1;

if (ba_refine_mask[4] == 'x') refine_mask(1,2) = 1;

adjuster->setRefinementMask(refine_mask);

(*adjuster)(features, pairwise_matches, cameras);

vector<double> focals;

for (size_t i = 0; i < cameras.size(); ++i)

{

cout<<"Camera #" << indices[i]+1 << ":\nK:\n" << cameras[i].K() << "\nR:\n" << cameras[i].R;

focals.push_back(cameras[i].focal);

}

sort(focals.begin(), focals.end());

float warped_image_scale;

if (focals.size() % 2 == 1)

warped_image_scale = static_cast<float>(focals[focals.size() / 2]);

else

warped_image_scale = static_cast<float>(focals[focals.size() / 2 - 1] + focals[focals.size() / 2]) * 0.5f;

if (do_wave_correct)// wave correction

{

vector<Mat> rmats;

for (size_t i = 0; i < cameras.size(); ++i)

rmats.push_back(cameras[i].R.clone());

waveCorrect(rmats, wave_correct);

for (size_t i = 0; i < cameras.size(); ++i)

cameras[i].R = rmats[i];

}

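//*** With the preparation done, warp the images and blend them

vector<Point> corners(num_images);// top-left corners of the warped images

vector<UMat> masks_warped(num_images);// masks of the warped images

vector<UMat> images_warped(num_images);// warped images

vector<Size> sizes(num_images);// sizes of the warped images

vector<UMat> masks(num_images);// masks at the size of the (seam-scale) source images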

for (int i = 0; i < num_images; ++i)

{

masks[i].create(images[i].size(), CV_8U);

masks[i].setTo(Scalar::all(255));

}

cout<<num_images<<endl;

Ptr<WarperCreator> warper_creator = makePtr<cv::SphericalWarper>();

Ptr<RotationWarper> warper = warper_creator->create(static_cast<float>(warped_image_scale * seam_work_aspect));

for (int i = 0; i < num_images; ++i)

{

Mat_<float> K;// K: camera intrinsic matrix

cameras[i].K().convertTo(K, CV_32F);

float swa = (float)seam_work_aspect;

cout<<swa<<endl;

K(0,0) *= swa; K(0,2) *= swa;

K(1,1) *= swa; K(1,2) *= swa;

// K(0,0) = swa; K(0,2) = 0;

// K(1,1) = swa; K(1,2) = 0;

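// Using the camera intrinsics (K) and extrinsics (R), warp the image: this gives the top-left corner of the warped image and the warped image itself

corners[i] = warper->warp(images[i], K, cameras[i].R, INTER_LINEAR, BORDER_REFLECT, images_warped[i]);//returns the top-left corner; the warped image is written to images_warped[i]

imshow(to_string(i)+a,images_warped[i]);

sizes[i] = images_warped[i].size();// size of the warped image

warper->warp(masks[i], K, cameras[i].R, INTER_NEAREST, BORDER_CONSTANT, masks_warped[i]);// warped mask (marks where the image lies in the panorama)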

cout<<"k"<<endl;

}

vector<UMat> images_warped_f(num_images);

for (int i = 0; i < num_images; ++i)

images_warped[i].convertTo(images_warped_f[i], CV_32F);

Ptr<ExposureCompensator> compensator = ExposureCompensator::createDefault(expos_comp_type);

if (dynamic_cast<GainCompensator*>(compensator.get()))

{

GainCompensator* gcompensator = dynamic_cast<GainCompensator*>(compensator.get());

gcompensator->setNrFeeds(expos_comp_nr_feeds);

}

if (dynamic_cast<ChannelsCompensator*>(compensator.get()))

{

ChannelsCompensator* ccompensator = dynamic_cast<ChannelsCompensator*>(compensator.get());

ccompensator->setNrFeeds(expos_comp_nr_feeds);

}

if (dynamic_cast<BlocksCompensator*>(compensator.get()))

{

BlocksCompensator* bcompensator = dynamic_cast<BlocksCompensator*>(compensator.get());

bcompensator->setNrFeeds(expos_comp_nr_feeds);

bcompensator->setNrGainsFilteringIterations(expos_comp_nr_filtering);

bcompensator->setBlockSize(expos_comp_block_size, expos_comp_block_size);

}

compensator->feed(corners, images_warped, masks_warped);

Ptr<SeamFinder> seam_finder= makePtr<detail::GraphCutSeamFinder>(GraphCutSeamFinderBase::COST_COLOR);

seam_finder->find(images_warped_f, corners, masks_warped);

cout<<"k"<<endl;

images.clear();

images_warped.clear();

images_warped_f.clear();

masks.clear();

Mat img_warped, img_warped_s;

Mat dilated_mask, seam_mask, mask, mask_warped;

Ptr<Blender> blender;

Ptr<Timelapser> timelapser;

double compose_work_aspect = 1;

for (int img_idx = 0; img_idx < num_images; ++img_idx)

{

cout<<"Compositing image #" << indices[img_idx]+1<<endl;

// Read image and resize it if necessary

full_img = imread(img_names[img_idx]);

//resize(full_img1,full_img,Size(full_img1.cols/2,full_img1.rows/2));

// imshow(to_string(img_idx+2)+a,full_img);

if (!is_compose_scale_set)

{

if (compose_megapix > 0)

compose_scale = min(1.0, sqrt(compose_megapix * 1e6 / full_img.size().area()));

is_compose_scale_set = true;

// Compute relative scales

//compose_seam_aspect = compose_scale / seam_scale;

compose_work_aspect = compose_scale / work_scale;

// Update warped image scale

warped_image_scale *= static_cast<float>(compose_work_aspect);

warper = warper_creator->create(warped_image_scale);

// Update corners and sizes

for (int i = 0; i < num_images; ++i)

{

// Update intrinsics

cameras[i].focal *= compose_work_aspect;

cameras[i].ppx *= compose_work_aspect;

cameras[i].ppy *= compose_work_aspect;

// Update corner and size

Size sz = full_img_sizes[i];

if (std::abs(compose_scale - 1) > 1e-1)

{

sz.width = cvRound(full_img_sizes[i].width * compose_scale);

sz.height = cvRound(full_img_sizes[i].height * compose_scale);

}

Mat K;

cameras[i].K().convertTo(K, CV_32F);

Rect roi = warper->warpRoi(sz, K, cameras[i].R);

corners[i] = roi.tl();

sizes[i] = roi.size();

}

}

if (abs(compose_scale - 1) > 1e-1)

resize(full_img, img, Size(), compose_scale, compose_scale, INTER_LINEAR_EXACT);

else

img = full_img;

full_img.release();

Size img_size = img.size();

Mat K;

cameras[img_idx].K().convertTo(K, CV_32F);

// Warp the current image

warper->warp(img, K, cameras[img_idx].R, INTER_LINEAR, BORDER_REFLECT, img_warped);

// Warp the current image mask

mask.create(img_size, CV_8U);

mask.setTo(Scalar::all(255));

warper->warp(mask, K, cameras[img_idx].R, INTER_NEAREST, BORDER_CONSTANT, mask_warped);

// Compensate exposure

compensator->apply(img_idx, corners[img_idx], img_warped, mask_warped);//apply() performs the exposure compensation

img_warped.convertTo(img_warped_s, CV_16S);

img_warped.release();

img.release();

mask.release();

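//build the blending mask: dilate the seam mask, resize it to the compositing size, and intersect it with the warped mask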

dilate(masks_warped[img_idx], dilated_mask, Mat());

resize(dilated_mask, seam_mask, mask_warped.size(), 0, 0, INTER_LINEAR_EXACT);

mask_warped = seam_mask & mask_warped;

if (!blender && !timelapse)

{

blender = Blender::createDefault(blend_type, try_cuda);

Size dst_sz = resultRoi(corners, sizes).size();

float blend_width = sqrt(static_cast<float>(dst_sz.area())) * blend_strength / 100.f;

if (blend_width < 1.f)

blender = Blender::createDefault(Blender::NO, try_cuda);

else if (blend_type == Blender::MULTI_BAND)

{

MultiBandBlender* mb = dynamic_cast<MultiBandBlender*>(blender.get());

mb->setNumBands(static_cast<int>(ceil(log(blend_width)/log(2.)) - 1.));

}

else if (blend_type == Blender::FEATHER)

{

FeatherBlender* fb = dynamic_cast<FeatherBlender*>(blender.get());

fb->setSharpness(1.f/blend_width);

}

blender->prepare(corners, sizes);

}

else if (!timelapser && timelapse)

{

timelapser = Timelapser::createDefault(timelapse_type);

timelapser->initialize(corners, sizes);

}

//start blending

// Blend the current image

if (timelapse)

{

timelapser->process(img_warped_s, Mat::ones(img_warped_s.size(), CV_8UC3), corners[img_idx]);

imwrite("gauhi.jpg",timelapser->getDst());//imwrite needs a file extension to choose the output format

cout<<"1"<<endl;

}

else

{

blender->feed(img_warped_s, mask_warped, corners[img_idx]);

}

}

if (!timelapse)

{

Mat result, result_mask;

blender->blend(result, result_mask);

imwrite("ronghe.jpg",result);

}

waitKey();

}
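Three small formulas in the code above decide most of the resolutions. The first gives the megapixel-based scales (work_scale, seam_scale). The second is the median focal length used as warped_image_scale. The third sets the number of bands for the multi-band blender.

The plain C++ sketch below restates them as standalone functions so they can be tried with concrete numbers. `megapixScale`, `medianFocal`, and `numBands` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Scale that shrinks an image of `area` pixels to about `megapix` megapixels,
// never enlarging it (as for work_scale and seam_scale above).
double megapixScale(double megapix, double area) {
    return std::min(1.0, std::sqrt(megapix * 1e6 / area));
}

// Median focal length, used above as warped_image_scale.
double medianFocal(std::vector<double> focals) {
    assert(!focals.empty());
    std::sort(focals.begin(), focals.end());
    std::size_t n = focals.size();
    return n % 2 == 1 ? focals[n / 2] : 0.5 * (focals[n / 2 - 1] + focals[n / 2]);
}

// Number of bands chosen above for multi-band blending:
// blend_width = sqrt(panorama area) * blend_strength / 100, bands = ceil(log2(blend_width)) - 1.
// The code above switches to Blender::NO when blend_width < 1, so that case is excluded here.
int numBands(double panoWidth, double panoHeight, double blendStrength) {
    double blendWidth = std::sqrt(panoWidth * panoHeight) * blendStrength / 100.0;
    assert(blendWidth >= 1.0);
    return static_cast<int>(std::ceil(std::log(blendWidth) / std::log(2.0)) - 1.0);
}
```

For example, with work_megapix = 0.6 a 4000×3000 photo is matched at a scale of about 0.22. A 640×480 image is used at full size, because the scale is capped at 1.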

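③ Using ArUco

1) Introduction to ArUco

An ArUco marker is a square binary marker made up of a wide black border and an inner binary matrix that determines its id. The black border makes the marker quick to detect in an image. The binary code lets the id be verified and allows error detection and correction techniques to be applied. The marker size determines the size of the inner matrix; for example, a 4x4 marker consists of 16 bits, as shown in the figure:

The key point: every ArUco marker has its own specific code. When a marker is detected in an image, we obtain both its code (id) and the positions of its four corners in the image.

2) Detecting ArUco markers

Given an image in which ArUco markers are visible, the detection procedure should return a list of the detected markers. Each detected marker includes:

- the positions of its four corners in the image (in their original order)

- the marker's id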

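//The functions and variables needed

vector< int > markerIds;

//markerIds: the ids of all detected markers

vector< vector<Point2f> > markerCorners, rejectedCandidates;

//markerCorners: the four corner positions of each detected marker, starting with the top-left corner, followed by the top-right, bottom-right, and bottom-left corners

//rejectedCandidates: the corners of candidate squares that were rejected because no valid code was found inside them. This parameter can be omitted; it is only useful for debugging or for a "refind" strategy

cv::Ptr<cv::aruco::DetectorParameters> parameters = cv::aruco::DetectorParameters::create();

//an object of type DetectorParameters, which holds all the parameters of the detection stage

cv::Ptr<cv::aruco::Dictionary> dictionary = cv::aruco::getPredefinedDictionary(cv::aruco::DICT_6X6_250);

//the dictionary must match your markers, e.g. cv::aruco::DICT_6X6_250 for 6x6 markers or cv::aruco::DICT_4X4_250 for 4x4 markers

cv::aruco::detectMarkers(inputImage, dictionary, markerCorners, markerIds, parameters, rejectedCandidates);

//the final step: detect the markers using the variables above; the ArUco information found in the image is stored in markerCorners, markerIds, and rejectedCandidates

cv::aruco::drawDetectedMarkers(inputImage, markerCorners, markerIds);

//draw the detection results onto the image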

Example code:

#include<iostream>

#include<vector>

#include<opencv2/opencv.hpp>

#include<opencv2/aruco.hpp>

#include <opencv2/imgproc/imgproc.hpp>

using namespace std;

using namespace cv;

int main()

{

cv::Mat inputImage_1,inputImage_2;

cv::Mat Image,Image_2;

inputImage_1=cv::imread("220.jpg");

inputImage_2=imread("221.jpg");

inputImage_1.copyTo(Image);

inputImage_2.copyTo(Image_2);

std::vector<int> markerIds,markerIds_2;

// std::vector<std::vector<cv::Point2f>> markerCorners, markerCorners_2;

std::vector<std::vector<cv::Point2f>> markerCorners, rejectedCandidates,markerCorners_2,rejectedCandidates_2;

cv::Ptr<cv::aruco::DetectorParameters> parameters=aruco::DetectorParameters::create();

cv::Ptr<cv::aruco::Dictionary> dictionary = cv::aruco::getPredefinedDictionary(cv::aruco::DICT_4X4_250);

cv::aruco::detectMarkers(Image, dictionary, markerCorners, markerIds, parameters, rejectedCandidates);

cv::aruco::detectMarkers(Image_2, dictionary, markerCorners_2, markerIds_2, parameters,rejectedCandidates_2);

cv::aruco::drawDetectedMarkers(Image_2, markerCorners_2, markerIds_2);

cv::aruco::drawDetectedMarkers(Image, markerCorners, markerIds);

cv::imshow("ou2",Image);

imshow("out_2",Image_2);

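Point2f center(0.f, 0.f);

if (!markerCorners.empty())

{

for (const Point2f& corner : markerCorners[0]) center += corner;// sum the four corners of the first detected marker

center *= 0.25f;// their mean approximates the marker center

}

cout<<center<<endl;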

cv::waitKey();

return 0;

}
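The example above works with marker corners, and a common next step is to compute a marker's center. Two definitions are sketched below in plain C++ with a hypothetical `Pt` type: the mean of the four corners, and the intersection of the two diagonals.

For a square marker seen under perspective, the diagonal intersection is where the physical center projects, because a perspective mapping keeps lines straight and preserves their intersections. The corner mean can drift away from that point when the marker is strongly tilted. `markerCenter` and `diagonalCenter` are hypothetical names.

```cpp
#include <array>
#include <cassert>

struct Pt { float x, y; };  // stand-in for cv::Point2f

// Mean of the four corners (ordered top-left, top-right, bottom-right, bottom-left).
Pt markerCenter(const std::array<Pt, 4>& corners) {
    Pt c{0.f, 0.f};
    for (const Pt& p : corners) { c.x += p.x; c.y += p.y; }
    c.x /= 4.f;
    c.y /= 4.f;
    return c;
}

// Intersection of the diagonals c0-c2 and c1-c3.
// Solves c0 + t*(c2 - c0) = c1 + s*(c3 - c1) for t using 2D cross products.
Pt diagonalCenter(const std::array<Pt, 4>& c) {
    float d1x = c[2].x - c[0].x, d1y = c[2].y - c[0].y;
    float d2x = c[3].x - c[1].x, d2y = c[3].y - c[1].y;
    float denom = d1x * d2y - d1y * d2x;
    assert(denom != 0.f);  // the diagonals of a valid marker are never parallel
    float t = ((c[1].x - c[0].x) * d2y - (c[1].y - c[0].y) * d2x) / denom;
    return Pt{c[0].x + t * d1x, c[0].y + t * d1y};
}
```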

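3) Camera calibration with ArUco

Because ArUco markers give us a lot of information about an image, they can also be used to calibrate the camera parameters. Below is a calibration method that uses an ArUco board.

Step 1: generate a calibration board and print it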

#include <opencv2/highgui.hpp>

#include <opencv2/aruco.hpp>

#include <opencv2/aruco/dictionary.hpp>

#include <opencv2/aruco/charuco.hpp>

#include <opencv2/core.hpp>

#include <opencv2/imgproc/imgproc.hpp>

#include <opencv2/opencv.hpp>

#include <vector>

#include <iostream>

using namespace std;

using namespace cv;

int main()

{

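int markersX = 5;//number of markers along the X axis

int markersY = 5;//number of markers along the Y axis; this example generates a 5x5 board

int markerLength = 100;//marker side length, in pixels

int markerSeparation = 20;//gap between adjacent markers, in pixels

int dictionaryId = cv::aruco::DICT_6X6_50;//ID of the dictionary the markers are taken from

int margins = markerSeparation;//gap between the markers and the image border

int borderBits = 1;//width of the marker border, in bits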

bool showImage = true;

Size imageSize;

imageSize.width = markersX * (markerLength + markerSeparation) - markerSeparation + 2 * margins;

imageSize.height =

markersY * (markerLength + markerSeparation) - markerSeparation + 2 * margins;

//size of the board image

Ptr<aruco::Dictionary> dictionary =

aruco::getPredefinedDictionary(aruco::PREDEFINED_DICTIONARY_NAME(dictionaryId));

Ptr<aruco::GridBoard> board = aruco::GridBoard::create(markersX, markersY, float(markerLength),

float(markerSeparation), dictionary);

// show created board

Mat boardImage;

board->draw(imageSize, boardImage, margins, borderBits);

if (showImage) {

imshow("board", boardImage);

imwrite("board2.jpg",boardImage);

waitKey(0);

}

return 0;

}
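For the parameters above, the board image comes out at 620 x 620 pixels: five 100-pixel markers and four 20-pixel gaps give 580 pixels, plus a 20-pixel margin on each side. The small helper below (a hypothetical name, not an OpenCV function) only restates the size formula from the program, so the arithmetic can be checked without OpenCV.

```cpp
#include <cassert>

// Side length (in pixels) of a GridBoard image along one axis, using the same
// formula as the board-generation program:
//   markers * (markerLength + markerSeparation) - markerSeparation + 2 * margins
// The last marker has no gap after it, hence the "- markerSeparation".
int boardImageSide(int markers, int markerLength, int markerSeparation, int margins) {
    return markers * (markerLength + markerSeparation) - markerSeparation + 2 * margins;
}
```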

Resulting image: [figure: the generated ArUco board, also saved as board2.jpg]

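Once the ArUco board has been printed, it can be used for calibration. The basic procedure is to capture a number of photos, detect the ArUco markers in each photo, record their corners and IDs, and then run the calibration. The main calibration function is: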

repError = aruco::calibrateCameraAruco(allCornersConcatenated, allIdsConcatenated,markerCounterPerFrame, board, imgSize, cameraMatrix,distCoeffs, rvecs, tvecs, calibrationFlags);

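//allCornersConcatenated: the corners of all detected markers from all frames

//allIdsConcatenated: the IDs of all detected markers, in the same order

//markerCounterPerFrame: the number of markers detected in each frame

//cameraMatrix, distCoeffs: the camera intrinsic matrix and the distortion coefficients

//rvecs, tvecs: the extrinsics (board pose) for each frame; repError is the RMS reprojection error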
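calibrateCameraAruco does not take the detections frame by frame: it expects all corners and IDs in two flat lists plus the number of markers per frame, so it can split them again internally. The flattening done at the end of the program below can be sketched without OpenCV as follows; the function name is hypothetical, and the corner type is a template parameter (with OpenCV it would be std::vector<cv::Point2f>).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Flattens per-frame detections into the layout calibrateCameraAruco expects:
// one flat list of marker corners, one flat list of IDs (same order), and the
// number of markers detected in each frame.
template <typename Corner>
void concatenateDetections(const std::vector<std::vector<Corner>>& allCorners,
                           const std::vector<std::vector<int>>& allIds,
                           std::vector<Corner>& cornersConcatenated,
                           std::vector<int>& idsConcatenated,
                           std::vector<int>& markerCounterPerFrame) {
    cornersConcatenated.clear();
    idsConcatenated.clear();
    markerCounterPerFrame.clear();
    for (std::size_t i = 0; i < allCorners.size(); ++i) {
        // remember how many markers this frame contributed
        markerCounterPerFrame.push_back(static_cast<int>(allCorners[i].size()));
        for (std::size_t j = 0; j < allCorners[i].size(); ++j) {
            cornersConcatenated.push_back(allCorners[i][j]);
            idsConcatenated.push_back(allIds[i][j]);
        }
    }
}
```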

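The program code is below. Run it and hold the ArUco board generated above in front of the camera in different poses. Press c to capture the current frame, or d to skip it; once enough frames have been captured, press ESC to finish and compute the result.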

#include <opencv2/highgui.hpp>

#include <opencv2/calib3d.hpp>

#include <opencv2/aruco.hpp>

#include <opencv2/imgproc.hpp>

#include <vector>

#include <iostream>

#include <ctime>

using namespace std;

using namespace cv;

int main()

{

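int calibrationFlags = 0;

float aspectRatio = 1;

int markersX = 5, markersY = 5; // board layout: must match the board generated in step 1

float markerLength = 100, markerSeparation = 20; // only the ratio matters for the intrinsics; the unit becomes the unit of tvecs

bool refindStrategy = true; // also try to recover missed markers using the known board layout

Ptr<aruco::DetectorParameters> detectorParams = aruco::DetectorParameters::create();

VideoCapture inputVideo;

inputVideo.open(0); // default camera

if (!inputVideo.isOpened()) { cerr << "could not open camera 0" << endl; return -1; }

int waitTime = 10; // milliseconds to wait for a key press on each frame

Ptr<aruco::Dictionary> dictionary =aruco::getPredefinedDictionary(aruco::DICT_6X6_50); // same dictionary as the printed board (step 1)

Ptr<aruco::GridBoard> gridboard =aruco::GridBoard::create(markersX, markersY, markerLength, markerSeparation, dictionary);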

Ptr<aruco::Board> board = gridboard.staticCast<aruco::Board>();

vector< vector< vector< Point2f > > > allCorners;

vector< vector< int > > allIds;

Size imgSize;

while (inputVideo.grab()) {

Mat image, imageCopy;

inputVideo.retrieve(image);

vector< int > ids;

vector< vector< Point2f > > corners, rejected;

// detect markers

aruco::detectMarkers(image, dictionary, corners, ids, detectorParams, rejected);

// refind strategy to detect more markers

if (refindStrategy) aruco::refineDetectedMarkers(image, board, corners, ids, rejected);

// draw results

image.copyTo(imageCopy);

if (ids.size() > 0) aruco::drawDetectedMarkers(imageCopy, corners, ids);

putText(imageCopy, "Press 'c' to add current frame",Point(10, 20), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 255), 1);

putText(imageCopy, "Press 'd' to ignore current frame.",Point(10, 40), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 255), 1);

putText(imageCopy, "Press 'ESC' to finish and calibrate",Point(10, 60), FONT_HERSHEY_SIMPLEX, 0.5, Scalar(0, 0, 255), 1);

imshow("out", imageCopy);

char key = (char)waitKey(waitTime);

if (key == 27) break;

if (key == 'c' && ids.size() > 0) {

cout << "Frame captured" << endl;

allCorners.push_back(corners);

allIds.push_back(ids);

imgSize = image.size();

}

}

Mat cameraMatrix, distCoeffs;

vector< Mat > rvecs, tvecs;

double repError;

cameraMatrix = Mat::eye(3, 3, CV_64F);

cameraMatrix.at< double >(0, 0) = 1;

vector< vector< Point2f > > allCornersConcatenated;

vector< int > allIdsConcatenated;

vector< int > markerCounterPerFrame;

markerCounterPerFrame.reserve(allCorners.size());

for (unsigned int i = 0; i < allCorners.size(); i++) {

markerCounterPerFrame.push_back((int)allCorners[i].size());

for (unsigned int j = 0; j < allCorners[i].size(); j++)

{

allCornersConcatenated.push_back(allCorners[i][j]);

allIdsConcatenated.push_back(allIds[i][j]);

}

}

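if (allIds.empty()) { cerr << "Not enough captures for calibration" << endl; return -1; }

repError = aruco::calibrateCameraAruco(allCornersConcatenated, allIdsConcatenated,markerCounterPerFrame, board, imgSize, cameraMatrix,distCoeffs, rvecs, tvecs, calibrationFlags);

cout<<"reprojection error: "<<repError<<endl;

cout<<cameraMatrix<<endl;

cout<<distCoeffs<<endl;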

return 0;

}

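4) Camera pose estimation

Once the camera parameters have been obtained as above, ArUco markers can be used to estimate the camera pose.

The aruco module provides a function that estimates the pose of every detected marker.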

Mat cameraMatrix, distCoeffs;

vector< Vec3d > rvecs, tvecs;

cv::aruco::estimatePoseSingleMarkers(corners, 0.05, cameraMatrix, distCoeffs, rvecs, tvecs);

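//corners: the marker corners returned by detectMarkers().

//the second parameter is the marker side length (in meters or any other unit); the translation vectors returned by pose estimation are in the same unit.

//cameraMatrix and distCoeffs are the camera calibration parameters obtained in the calibration step.

//rvecs and tvecs are the rotation and translation vectors of each marker.

//drawing function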

cv::aruco::drawAxis(image, cameraMatrix, distCoeffs, rvec, tvec, 0.1);

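//image: the input/output image on which the axes are drawn (usually the image in which the markers were detected).

//cameraMatrix and distCoeffs: the camera calibration parameters.

//rvec and tvec: the pose parameters, which determine where the axes are drawn.

//the last parameter is the axis length, in the same unit as tvec (usually meters).

The marker coordinate system used by this function has its origin at the center of the marker, with the Z axis pointing out of the paper, as shown in the figures below. Axis colors: X red, Y green, Z blue.

//Program code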

#include<iostream>

#include<vector>

#include<opencv2/opencv.hpp>

#include <opencv2/aruco.hpp>

#include<opencv2/videoio.hpp>

using namespace std;

using namespace cv;

int main()

{

cv::VideoCapture inputVideo;

inputVideo.open(0);

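// example values: replace cameraMatrix and distCoeffs with the results printed by the calibration program

cv::Mat cameraMatrix=(Mat_<double>(3,3)<<1000,0,320,0,1000,240,0,0,1);

Mat distCoeffs=(Mat_<double>(5,1)<<0.1,0.01,-0.001,0,0);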

cv::Ptr<cv::aruco::Dictionary> dictionary = cv::aruco::getPredefinedDictionary(cv::aruco::DICT_4X4_250);

while (inputVideo.grab()) {

cv::Mat image, imageCopy;

inputVideo.retrieve(image);

image.copyTo(imageCopy);

std::vector<int> ids;

std::vector<std::vector<cv::Point2f>> corners;

cv::aruco::detectMarkers(image, dictionary, corners, ids);


// if at least one marker detected

if (ids.size() > 0) {

cv::aruco::drawDetectedMarkers(imageCopy, corners, ids);

std::vector<cv::Vec3d> rvecs, tvecs;

cv::aruco::estimatePoseSingleMarkers(corners, 0.05, cameraMatrix, distCoeffs, rvecs, tvecs);

// draw axis for each marker

for(int i=0; i<ids.size(); i++)

cv::aruco::drawAxis(imageCopy, cameraMatrix, distCoeffs, rvecs[i], tvecs[i], 0.1);

}


cv::imshow("out", imageCopy);

char key = (char) cv::waitKey(1);

if (key == 27) // ESC

break;

}

}

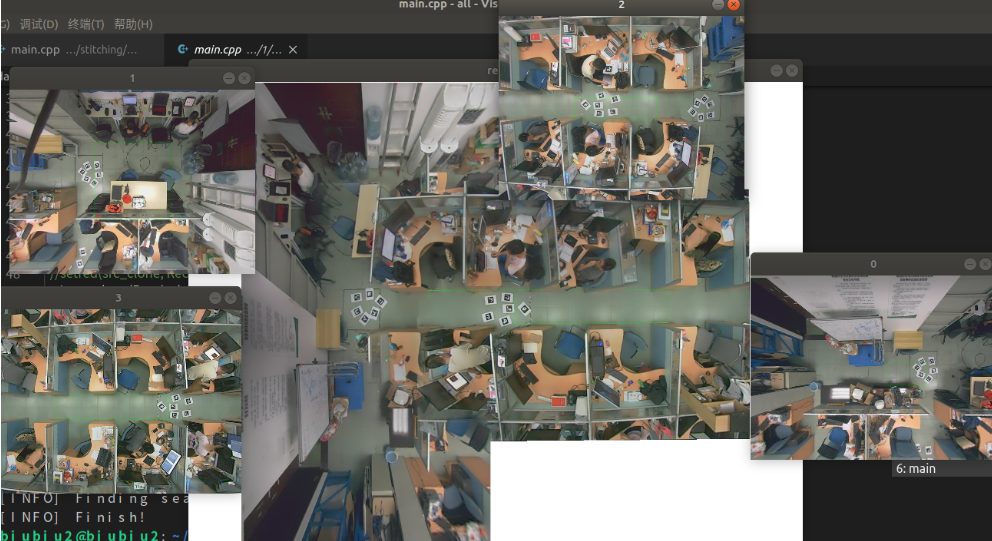

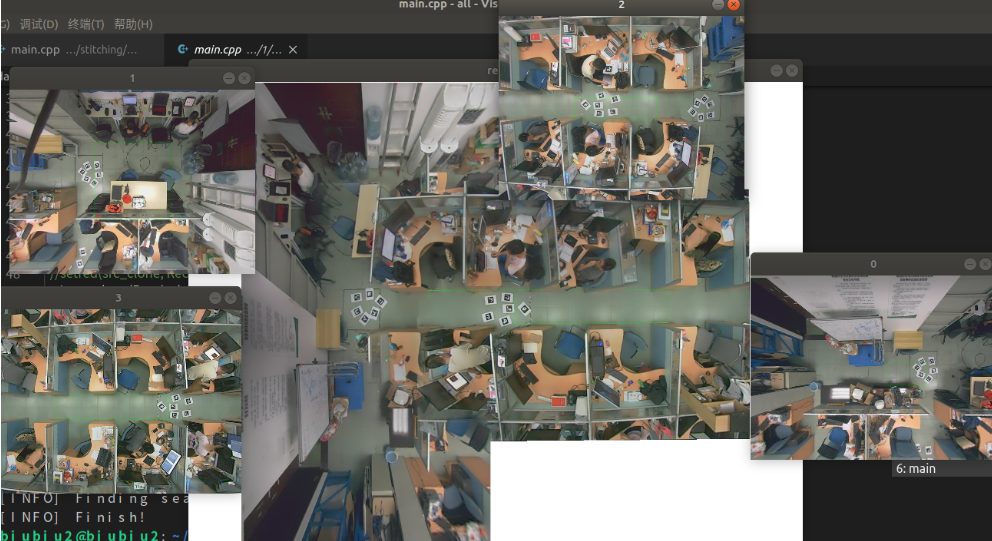
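estimatePoseSingleMarkers returns the pose of each marker in the camera frame (X_cam = R * X_marker + t, with R obtained from rvec). If the position of the camera relative to a marker is needed instead, it is C = -R^T t. The sketch below computes this without OpenCV, using the Rodrigues formula for the rvec-to-matrix conversion (in an OpenCV program cv::Rodrigues does this step); the function names are illustrative only.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Rodrigues formula: rotation vector (axis * angle) -> 3x3 rotation matrix,
// R = I + sin(theta) K + (1 - cos(theta)) K^2, K = cross-product matrix of the unit axis.
Mat3 rodrigues(const Vec3& r) {
    double theta = std::sqrt(r[0] * r[0] + r[1] * r[1] + r[2] * r[2]);
    Mat3 R = {{{1, 0, 0}, {0, 1, 0}, {0, 0, 1}}};
    if (theta < 1e-12) return R;  // no rotation
    double kx = r[0] / theta, ky = r[1] / theta, kz = r[2] / theta;
    Mat3 K = {{{0, -kz, ky}, {kz, 0, -kx}, {-ky, kx, 0}}};
    double s = std::sin(theta), c = 1 - std::cos(theta);
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) {
            double k2 = 0;  // (K * K)[i][j]
            for (int m = 0; m < 3; ++m) k2 += K[i][m] * K[m][j];
            R[i][j] += s * K[i][j] + c * k2;
        }
    return R;
}

// rvec/tvec map marker coordinates to camera coordinates: X_cam = R X_marker + t.
// The camera center in marker coordinates is therefore C = -R^T t.
Vec3 cameraPositionInMarkerFrame(const Vec3& rvec, const Vec3& tvec) {
    Mat3 R = rodrigues(rvec);
    Vec3 C{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) C[i] -= R[j][i] * tvec[j];  // R^T uses R[j][i]
    return C;
}
```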

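5) My implementation of image fusion

After studying basic image processing, the stitching module and ArUco, I realized that ArUco detection gives the corner positions and IDs of the markers in every image. By matching the markers shared by overlapping images, the relative position and rotation of the images can be recovered, and the images can then be fused.

① Input

Load the images containing ArUco markers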

vector<Mat> srcs;

for(int i = 0; i < 4; i++){

//Mat t = imread(PIC_PATH+pic[i]);

Mat t = imread(PIC_ROOT + string(argv[2]) + "/" + pic[i]);

if(t.empty()){

LOG(string("[Error] no such file: ") + PIC_ROOT + string(argv[2]) + "/" + pic[i]);

exit(-1);

}

resize(t, s_srcs[i], Size(S_WIDTH, S_HEIGHT));

srcs.push_back(t);

}

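② Detection

Detect the ArUco markers in each image and store the information for each image in a struct

fusioner.init(srcs);// performs steps ② and ③

③ Matching

Match the images pairwise (find the ArUco markers shared by two images) to obtain each image's rotation matrix R and its position in the fused image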

void Fusioner::init(vector<Mat>& srcs){

cv::Ptr<cv::aruco::Dictionary> dictionary = cv::aruco::getPredefinedDictionary(cv::aruco::DICT_4X4_250);

vector<Point> corners(2);

vector<Point> sizes(2);

arucos.clear();

LOG("[INFO] Finding Arucos...");

for (int i = 0; i < srcs.size(); i++){

ImageAruco tmp;

Mat inputImage = srcs[i];

Mat imageCopy = inputImage.clone();

tmp.image = srcs[i];

std::vector<int> ids;

std::vector<std::vector<cv::Point2f>> corners;

cv::aruco::detectMarkers(tmp.image, dictionary, corners, ids);

tmp.ids = ids;

tmp.corners = corners;

if(i == 0) {

tmp.mask.create(tmp.image.size(), CV_8UC1);

tmp.mask.setTo(Scalar::all(255));

tmp.size = tmp.image.size();

tmp.roi = Rect(0,0,tmp.image.cols, tmp.image.rows);

tmp.R = Mat(2,3,CV_32FC1, Scalar(0));

tmp.R.at<float>(0,0) = 1;

tmp.R.at<float>(1,1) = 1;

tmp.scale = 1;

}

arucos.push_back(tmp);

}

srcs.clear();

int count = arucos.size() - 1;

vector<bool> arucos_success(arucos.size());

arucos_success[0] = true;

for(int i = 1; i < arucos.size(); i++){

arucos_success[i] = false;

}

LOG("[INFO] Matching Arucos...");

while(count){

int icount = count;

for(int i = 1; i < arucos.size() ; i++){

if(!arucos_success[i]){

for(int j = 0; j < arucos.size(); j++){

if(arucos_success[j] && match(arucos[j],arucos[i]).size() > 0){

double angle = compute(arucos[j], arucos[i]);

// arucos[i].mask.create(arucos[i].image.size(), CV_8UC1);

// arucos[i].mask.setTo(Scalar::all(255));

Mat tmp;

Mat R = warpRotation(arucos[i].image, tmp, angle);

arucos[i].R = R;

arucos[i].size = tmp.size();

tmp.release();

arucos[i].mask.create(arucos[i].first_size, CV_8UC1);

arucos[i].mask.setTo(Scalar::all(255));

warpRotation(arucos[i].mask, arucos[i].mask, angle);

for(int idx = 0; idx < arucos[i].corners.size(); idx++){

for(int l = 0; l < 4; l++){

change(arucos[i].corners[idx][l], R);

}

}

arucos[i].roi = findRoi(arucos[j], arucos[i]);

arucos_success[i] = true;

count--;

break;

}

}

}

}

if(icount == count){

LOG("[Error] There is an image alone.(No aruco matched with others')");

exit(-1);

}

}

getResult();

}
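The while(count) loop in init() is a breadth-first style registration: image 0 is the reference, and on each pass every unplaced image is attached to the first placed image it shares a marker with, until all are placed or a pass makes no progress. Its control flow, without the OpenCV work, can be sketched as below; sharesMarker stands in for match(a, b).size() > 0, and all names are hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// parent[i] receives the index of the already-placed image that image i was
// aligned to (-1 for the reference image 0). Returns false if some image shares
// no marker with the others, which is the "[Error] There is an image alone" case.
bool registrationOrder(int n, const std::function<bool(int, int)>& sharesMarker,
                       std::vector<int>& parent) {
    parent.assign(n, -1);
    if (n == 0) return true;
    std::vector<bool> done(n, false);
    done[0] = true;  // image 0 defines the coordinate frame
    int remaining = n - 1;
    while (remaining > 0) {
        int before = remaining;
        for (int i = 1; i < n; ++i) {
            if (done[i]) continue;
            for (int j = 0; j < n; ++j) {
                if (done[j] && sharesMarker(j, i)) {
                    parent[i] = j;  // image i is placed relative to image j
                    done[i] = true;
                    --remaining;
                    break;
                }
            }
        }
        if (remaining == before) return false;  // a full pass placed nothing new
    }
    return true;
}
```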

④ Preparation

Prepare the data required by the blending functions of the stitching module

⑤ Fusion

void Fusioner::getResult(){

double time1 = static_cast<double>( getTickCount());

LOG("[INFO] Preparing Data...");

vector<Point> corners;

vector<Size> sizes;

vector<UMat> masks;

vector<UMat> uimgs;

vector<Mat> imgs;

for(int i = 0; i < arucos.size(); i++){

corners.push_back(Point(arucos[i].roi.x, arucos[i].roi.y));

sizes.push_back(Size(arucos[i].roi.width, arucos[i].roi.height));

}

Rect origin_dstRoi = resultRoi(corners, sizes);

dstScaleQ = 1;

vector<Mat> arucosMask;

for(int i = 0; i < arucos.size(); i++){

Mat tmp = arucos[i].mask.clone();

arucosMask.push_back(tmp);

}

if(BE_QUICK){

double scaleX = 800.0/origin_dstRoi.width;

double scaleY = 600.0/origin_dstRoi.height;

double fin_scale;

if (scaleX > scaleY){

fin_scale = scaleY;

}else{

fin_scale =scaleX;

}

for(int i = 0; i < arucos.size(); i++){

resize(arucosMask[i], arucosMask[i], Size(), fin_scale, fin_scale);

arucos[i].scale = fin_scale;

}

dstScaleQ = fin_scale;

}

corners.clear();

sizes.clear();

for(int i = 0; i < arucos.size(); i++){

corners.push_back(Point(arucos[i].roi.x * arucos[i].scale, arucos[i].roi.y * arucos[i].scale));

sizes.push_back(Size(arucos[i].roi.width * arucos[i].scale, arucos[i].roi.height * arucos[i].scale));

masks.push_back(UMat(arucosMask[i].size(),CV_8U));

arucosMask[i].copyTo(masks[i]);

// imshow("ad", arucos[i].mask);

Mat rimage;

warpAffine(arucos[i].image, rimage, arucos[i].R, arucos[i].size);

resize(rimage, rimage, Size(), arucos[i].scale, arucos[i].scale);

uimgs.push_back(UMat(rimage.size(), CV_8UC3));

rimage.copyTo(uimgs[i]);

imgs.push_back(rimage);

}

LOG("[INFO] Compensating exposure...");

Ptr<ExposureCompensator> compensator = ExposureCompensator::createDefault(expos_comp_type);

if (dynamic_cast<GainCompensator*>(compensator.get()))

{

GainCompensator* gcompensator = dynamic_cast<GainCompensator*>(compensator.get());

gcompensator->setNrFeeds(expos_comp_nr_feeds);

}

if (dynamic_cast<ChannelsCompensator*>(compensator.get()))

{

ChannelsCompensator* ccompensator = dynamic_cast<ChannelsCompensator*>(compensator.get());

ccompensator->setNrFeeds(expos_comp_nr_feeds);

}

if (dynamic_cast<BlocksCompensator*>(compensator.get()))

{

BlocksCompensator* bcompensator = dynamic_cast<BlocksCompensator*>(compensator.get());

bcompensator->setNrFeeds(expos_comp_nr_feeds);

bcompensator->setNrGainsFilteringIterations(expos_comp_nr_filtering);

bcompensator->setBlockSize(expos_comp_block_size, expos_comp_block_size);

}

compensator->feed(corners, uimgs, masks);

LOG("[INFO] Finding seams...");

Ptr<SeamFinder> seam_finder;

{

if (seam_find_type == "no")

seam_finder = makePtr<detail::NoSeamFinder>();

else

{

seam_finder = makePtr<detail::GraphCutSeamFinder>(GraphCutSeamFinderBase::COST_COLOR);

}

}

vector<UMat> images_warped_f(arucos.size());

for (int i = 0; i < arucos.size(); ++i)

uimgs[i].convertTo(images_warped_f[i], CV_32F);

seam_finder->find(images_warped_f, corners, masks);

images_warped_f.clear();

Ptr<Blender> blender;

Rect dstRoi = resultRoi(corners, sizes);

for(int i = 0; i < arucos.size(); i++){

Mat img = imgs[i];

Mat mask = arucosMask[i];

Mat img_s;

Mat dilated_mask, seam_mask;

compensator->apply(i, corners[i], img, mask);

img.convertTo(img_s, CV_16S);

dilate(masks[i], dilated_mask, Mat());

resize(dilated_mask, seam_mask, mask.size(), 0, 0, INTER_LINEAR_EXACT);

mask = seam_mask & mask;

if (!blender)

{

blender = Blender::createDefault(Blender::MULTI_BAND, try_cuda);

Size dst_sz = resultRoi(corners, sizes).size();

float blend_width = sqrt(static_cast<float>(dst_sz.area())) * blend_strength / 100.f;

if (blend_width < 1.f)

blender = Blender::createDefault(Blender::NO, try_cuda);

else

{

MultiBandBlender* mb = dynamic_cast<MultiBandBlender*>(blender.get());

mb->setNumBands(static_cast<int>(ceil(log(blend_width)/log(2.)) - 1.));

}

blender->prepare(corners, sizes);

}

blender->feed(img_s, mask, corners[i]);

arucos[i].T = addDisplacement(arucos[i].R, arucos[i].roi.x - origin_dstRoi.x, arucos[i].roi.y - origin_dstRoi.y);

invert(toSquare(arucos[i].T), arucos[i].iT);

}

{

Mat result, result_mask;

blender->blend(result, result_mask);

bitwise_not(result_mask,result_mask);

result_mask.convertTo(result_mask,CV_8UC1);

result.convertTo(result,CV_8UC3);

//imshow("rw",result_mask);

for(int i = 0; i < result.rows; i++){

for(int j = 0; j < result.cols; j++){

Vec3b colors = result.at<Vec3b>(i,j);

colors[0] += result_mask.ptr<uchar>(i)[j];

colors[1] += result_mask.ptr<uchar>(i)[j];

colors[2] += result_mask.ptr<uchar>(i)[j];

result.at<Vec3b>(i,j) = colors;

}

}

processDst(result);

resultImage = result.clone();

}

corners.clear();

sizes.clear();

masks.clear();

uimgs.clear();

for(int i = 0; i < arucos.size(); i++){

arucos[i].image.release();

}

LOG("[INFO] Finish! ");

double time2 = (static_cast<double>( getTickCount()) - time1)/getTickFrequency();

}
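The number of bands set on MultiBandBlender grows with the logarithm of the result size: blend_width = sqrt(area) * blend_strength / 100 and bands = ceil(log2(blend_width)) - 1. blend_strength is defined elsewhere in the program (not shown); the test values below assume 5, the default in OpenCV's stitching_detailed sample, and the 800 x 600 size targeted by BE_QUICK. The function name is hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Band count chosen in getResult(): returns 0 when blend_width < 1, which is the
// case where getResult() falls back to Blender::NO instead of multi-band blending.
int multiBandCount(int width, int height, float blendStrength) {
    float blendWidth = std::sqrt(static_cast<float>(width) * height) * blendStrength / 100.f;
    if (blendWidth < 1.f) return 0;
    return static_cast<int>(std::ceil(std::log(blendWidth) / std::log(2.)) - 1.);
}
```

For an 800 x 600 result this gives a blend width of about 34.6 pixels and therefore 5 bands.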

⑥ Implementation of the helper functions

Matching the ArUco markers of two images

vector<pair<int, int>> Fusioner::match(ImageAruco src1, ImageAruco src2)

{

vector<pair<int, int>> match_idx;

for (int i = 0; i < src1.ids.size(); i++)

{

int id = src2.findIds(src1.ids[i]);

if (id == -1)

{

continue;

}

match_idx.push_back(pair<int, int>(i, id));

}

return match_idx;

}
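match() relies on findIds(), which is not shown. Assuming it returns the index of an ID in the second image or -1, the same matching can be written with a hash map, which makes that contract explicit and avoids the nested search (for a handful of markers the speed difference is negligible). A sketch with hypothetical names:

```cpp
#include <cassert>
#include <unordered_map>
#include <utility>
#include <vector>

// Returns (index in ids1, index in ids2) for every marker ID present in both
// images, in the order of ids1. If an ID occurs twice in ids2, the first index wins.
std::vector<std::pair<int, int>> matchIds(const std::vector<int>& ids1,
                                          const std::vector<int>& ids2) {
    std::unordered_map<int, int> indexOf;
    for (int j = 0; j < static_cast<int>(ids2.size()); ++j) indexOf.emplace(ids2[j], j);
    std::vector<std::pair<int, int>> result;
    for (int i = 0; i < static_cast<int>(ids1.size()); ++i) {
        auto it = indexOf.find(ids1[i]);
        if (it != indexOf.end()) result.emplace_back(i, it->second);
    }
    return result;
}
```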

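Obtaining the rotation angle and the scale:

The scale factor from image B to image A can be obtained by comparing the sizes of the same ArUco marker in the two images (the code below computes it but currently overrides it with scale = 1).

Since two points determine a line and therefore its angle, the centers of the shared ArUco markers are computed in both images, the angle between each pair of corresponding lines (joining the centers of consecutive shared markers) is measured, and the average is taken as the rotation angle. When only one marker is shared, the diagonal of that marker (corner 0 to corner 2) is used instead.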
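In compute(), asin supplies the sign of the rotation and acos its magnitude. Both can come from a single atan2(cross, dot) call, which also avoids acos returning NaN when rounding pushes the cosine slightly outside [-1, 1]. A possible replacement for that two-step computation, keeping the same sign convention (function name hypothetical):

```cpp
#include <cassert>
#include <cmath>

// Signed angle in degrees from vector v0 = (x0, y0) to v1 = (x1, y1), in (-180, 180].
// Positive when the cross product x0*y1 - y0*x1 is positive, as in compute().
double signedAngleDeg(double x0, double y0, double x1, double y1) {
    const double pi = std::acos(-1.0);
    double cross = x0 * y1 - y0 * x1;
    double dot = x0 * x1 + y0 * y1;
    return std::atan2(cross, dot) * 180.0 / pi;
}
```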

double Fusioner::compute(ImageAruco& src0, ImageAruco& src1){

vector<pair<int, int>> idxMatch = match(src0,src1);

double angleSum = 0;

double scale = 0;

if (idxMatch.size() >= 2){

for(int i = 1; i < idxMatch.size(); i++){

int id_0 = idxMatch[i].first;

int id_00 = idxMatch[i-1].first;

int id_1 = idxMatch[i].second;

int id_10 = idxMatch[i-1].second;

Point2f v0,v1;

{

Point p0 = src0.corners[id_0][0];

Point p1 = src0.corners[id_0][2];

Point p2 = src0.corners[id_00][0];

Point p3 = src0.corners[id_00][2];

v0.x = ((p1.x + p0.x) - (p2.x + p3.x))*0.5;

v0.y = ((p1.y + p0.y) - (p2.y + p3.y))*0.5;

//line(src0.image,p0,p2,Scalar(0,0,255),3);

}

{

Point p0 = src1.corners[id_1][0];

Point p1 = src1.corners[id_1][2];

Point p2 = src1.corners[id_10][0];

Point p3 = src1.corners[id_10][2];

v1.x = ((p1.x + p0.x) - (p2.x + p3.x))*0.5;

v1.y = ((p1.y + p0.y) - (p2.y + p3.y))*0.5;

//line(src1.image,p0,p2,Scalar(0,0,255),3);

}

double label = asin((v0.x*v1.y-v0.y*v1.x) / (sqrt(pow(v0.x,2)+pow(v0.y,2))*sqrt(pow(v1.x,2)+pow(v1.y,2))) );

double angle = 360*acos(((v0.x*v1.x)+(v0.y*v1.y)) / (sqrt(pow(v0.x,2)+pow(v0.y,2))*sqrt(pow(v1.x,2)+pow(v1.y,2))))/(2*CV_PI);

angle *= label < 0 ? -1 : 1;

angleSum += angle;

scale += sqrt(pow(v0.x,2)+pow(v0.y,2))/sqrt(pow(v1.x,2)+pow(v1.y,2));

}

angleSum /= idxMatch.size()-1;

//scale /= idxMatch.size() -1;

scale = 1;

}else{

for(int i = 0; i < idxMatch.size(); i++){

int id_0 = idxMatch[i].first;

int id_1 = idxMatch[i].second;

Point2f v0,v1;

{

Point p0 = src0.corners[id_0][0];

Point p2 = src0.corners[id_0][2];

v0.x = p2.x-p0.x;

v0.y = p2.y-p0.y;

//line(src0.image,p0,p2,Scalar(0,0,255),3);

}

{

Point p0 = src1.corners[id_1][0];

Point p2 = src1.corners[id_1][2];

v1.x = p2.x-p0.x;

v1.y = p2.y-p0.y;

//line(src1.image,p0,p2,Scalar(0,0,255),3);

}

double label = asin((v0.x*v1.y-v0.y*v1.x) / (sqrt(pow(v0.x,2)+pow(v0.y,2))*sqrt(pow(v1.x,2)+pow(v1.y,2))) );

double angle = 360*acos(((v0.x*v1.x)+(v0.y*v1.y)) / (sqrt(pow(v0.x,2)+pow(v0.y,2))*sqrt(pow(v1.x,2)+pow(v1.y,2))))/(2*CV_PI);

angle *= label < 0 ? -1 : 1;

angleSum += angle;

scale += sqrt(pow(v0.x,2)+pow(v0.y,2))/sqrt(pow(v1.x,2)+pow(v1.y,2));

}

angleSum /= idxMatch.size();

//scale /= idxMatch.size();

scale = 1;

}

return angleSum;

}

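Obtaining the ROI (the position of each image's top-left corner in the fused image)

The first image is placed at (0,0); the ROI of each subsequent image is computed from the average displacement of the corners of the ArUco markers it shares with an already-placed image.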

Rect Fusioner::findRoi(ImageAruco first, ImageAruco second){

vector<pair<int, int>> idxMatch = match(first, second);

double dx = 0, dy = 0;

int x0 = first.roi.x;

int y0 = first.roi.y;

for(int i = 0; i < idxMatch.size(); i++){

int id_0 = idxMatch[i].first;

int id_1 = idxMatch[i].second;

for(int j = 0; j < 4; j++){

dx += first.corners[id_0][j].x - second.corners[id_1][j].x;

dy += first.corners[id_0][j].y - second.corners[id_1][j].y;

}

}

dx /= (idxMatch.size()*4);

dy /= (idxMatch.size()*4);

return Rect(x0 + dx, y0 + dy, second.size.width, second.size.height);

}

Obtaining the rotation matrix from the rotation angle (using getRotationMatrix2D())

Mat warpRotation(Mat src, Mat &dst, double degree){

Point2f center;

center.x = float(src.cols / 2.0 - 0.5);

center.y = float(src.rows / 2.0 - 0.5);

double angle = degree * CV_PI / 180.;

double a = sin(angle), b = cos(angle);

int width = src.cols;

int height = src.rows;

int width_rotate = int(height * fabs(a) + width * fabs(b));

int height_rotate = int(width * fabs(a) + height * fabs(b));

Mat M1 = getRotationMatrix2D(center, degree, 1.0);

Point2f srcPoints1[3];

Point2f dstPoints1[3];

srcPoints1[0] = Point2i(0, 0);

srcPoints1[1] = Point2i(0, src.rows);

srcPoints1[2] = Point2i(src.cols, 0);

dstPoints1[0] = Point2i((width_rotate - width)/2 , (height_rotate - height)/2);

dstPoints1[1] = Point2i((width_rotate - width)/2 , src.rows + (height_rotate - height)/2);

dstPoints1[2] = Point2i(src.cols + (width_rotate - width)/2, (height_rotate - height)/2);

Mat M2 = getAffineTransform(srcPoints1, dstPoints1);

M1.at<double>(0, 2) = M1.at<double>(0, 2) + M2.at<double>(0, 2);

M1.at<double>(1, 2) = M1.at<double>(1, 2) + M2.at<double>(1, 2);

Mat res5; // separate output buffer, so that dst may refer to the same image as src (warpAffine allocates it with the right size and type)

warpAffine(src, res5, M1, Size(width_rotate, height_rotate));

dst = res5.clone();

return M1;

}
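warpRotation enlarges the canvas so the rotated image is not clipped: a w x h image rotated by θ needs a canvas of (h|sinθ| + w|cosθ|) x (w|sinθ| + h|cosθ|), and the translation of the rotation matrix is shifted by half the growth of the canvas. The dependency-free sketch below rebuilds the same matrix from the formula documented for cv::getRotationMatrix2D (scale 1), so it can be checked without OpenCV; the struct and function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

struct RotatedCanvas {
    int width, height;  // canvas that holds the whole rotated image
    double M[2][3];     // 2x3 affine matrix: rotation about the image center + shift
};

// Rotation part as documented for getRotationMatrix2D with scale 1:
//   [  a  b  (1-a)*cx - b*cy ]
//   [ -b  a  b*cx + (1-a)*cy ],  a = cos(theta), b = sin(theta)
RotatedCanvas rotatedCanvas(int cols, int rows, double degree) {
    const double pi = std::acos(-1.0);
    double t = degree * pi / 180.0;
    double a = std::cos(t), b = std::sin(t);
    RotatedCanvas r;
    r.width = int(rows * std::fabs(b) + cols * std::fabs(a));
    r.height = int(cols * std::fabs(b) + rows * std::fabs(a));
    double cx = cols / 2.0 - 0.5, cy = rows / 2.0 - 0.5;  // same center as warpRotation
    int sx = (r.width - cols) / 2, sy = (r.height - rows) / 2;  // half the canvas growth
    r.M[0][0] = a;  r.M[0][1] = b; r.M[0][2] = (1 - a) * cx - b * cy + sx;
    r.M[1][0] = -b; r.M[1][1] = a; r.M[1][2] = b * cx + (1 - a) * cy + sy;
    return r;
}
```

For example, a 100 x 50 image rotated by 90 degrees needs a 50 x 100 canvas, and its center (49.5, 24.5) lands on the new center (24.5, 49.5).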

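⑦ Coordinate transformation

Coordinate transformation between the source images and the fused image

The transformation matrices and ROIs obtained above give the mapping from source-image coordinates to fused-image coordinates; applying the inverse transformations in reverse order gives the mapping back.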

Point2f Fusioner::getDstPos(Point2f src, int idx, bool isInverse){

if(isInverse){

change(src, idstR);

src = Point2f(1.0 * src.x / (dstScale * dstScaleQ), 1.0 * src.y / (dstScale * dstScaleQ));

// src.x /= arucos[idx].scale;

// src.y /= arucos[idx].scale;

change(src, arucos[idx].iT);

src = getOrigin(src.x, src.y, true);

return src;

}

else{

src = getOrigin(src.x, src.y);

change(src, arucos[idx].T);

// src.x *= arucos[idx].scale;

// src.y *= arucos[idx].scale;

src = Point2f(src.x * (dstScale * dstScaleQ), src.y * (dstScale * dstScaleQ));

change(src, dstR);

LOG(src);

return src;

}

}
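change(), toSquare(), getOrigin() and the matrices dstR/idstR are defined elsewhere and not shown here. Assuming change() applies a 2x3 affine matrix to a point and toSquare() appends the row [0 0 1] so the matrix can be inverted, the forward and inverse mappings reduce to the following sketch (all names hypothetical):

```cpp
#include <cassert>
#include <cmath>

// A 2x3 affine transform [A | t], like R and T in the fusion code.
struct Affine { double m[2][3]; };

// Applies the transform to a point in place: p' = A p + t.
void applyAffine(const Affine& T, double& x, double& y) {
    double nx = T.m[0][0] * x + T.m[0][1] * y + T.m[0][2];
    double ny = T.m[1][0] * x + T.m[1][1] * y + T.m[1][2];
    x = nx;
    y = ny;
}

// Inverse transform: A^-1 and -A^-1 t, which is what inverting the 3x3 matrix
// [A t; 0 0 1] amounts to. Returns false if A is singular.
bool invertAffine(const Affine& T, Affine& inv) {
    double a = T.m[0][0], b = T.m[0][1], c = T.m[1][0], d = T.m[1][1];
    double det = a * d - b * c;
    if (std::fabs(det) < 1e-12) return false;
    inv.m[0][0] = d / det;  inv.m[0][1] = -b / det;
    inv.m[1][0] = -c / det; inv.m[1][1] = a / det;
    inv.m[0][2] = -(inv.m[0][0] * T.m[0][2] + inv.m[0][1] * T.m[1][2]);
    inv.m[1][2] = -(inv.m[1][0] * T.m[0][2] + inv.m[1][1] * T.m[1][2]);
    return true;
}
```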

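⑧ Downscaled fusion

Because the blend step of the stitching module is slow, the images are resized before blending to speed up fusion. Note that the transformation information is still computed from the full-resolution images before blending.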

Rect origin_dstRoi = resultRoi(corners, sizes);

dstScaleQ = 1;

vector<Mat> arucosMask;

for(int i = 0; i < arucos.size(); i++){

Mat tmp = arucos[i].mask.clone();

arucosMask.push_back(tmp);

}

if(BE_QUICK){

double scaleX = 800.0/origin_dstRoi.width;

double scaleY = 600.0/origin_dstRoi.height;

double fin_scale;

if (scaleX > scaleY){

fin_scale = scaleY;

}else{

fin_scale =scaleX;

}

for(int i = 0; i < arucos.size(); i++){

resize(arucosMask[i], arucosMask[i], Size(), fin_scale, fin_scale);

arucos[i].scale = fin_scale;

}

dstScaleQ = fin_scale;

}

corners.clear();

sizes.clear();

for(int i = 0; i < arucos.size(); i++){

corners.push_back(Point(arucos[i].roi.x * arucos[i].scale, arucos[i].roi.y * arucos[i].scale));

sizes.push_back(Size(arucos[i].roi.width * arucos[i].scale, arucos[i].roi.height * arucos[i].scale));

masks.push_back(UMat(arucosMask[i].size(),CV_8U));

arucosMask[i].copyTo(masks[i]);

// imshow("ad", arucos[i].mask);

Mat rimage;

warpAffine(arucos[i].image, rimage, arucos[i].R, arucos[i].size);

resize(rimage, rimage, Size(), arucos[i].scale, arucos[i].scale);

uimgs.push_back(UMat(rimage.size(), CV_8UC3));

rimage.copyTo(uimgs[i]);

imgs.push_back(rimage);

}
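With BE_QUICK set, the scale is the largest uniform factor that fits the fused result into 800 x 600, i.e. min(800 / width, 600 / height). Written as a function (hypothetical name):

```cpp
#include <algorithm>
#include <cassert>

// Uniform scale that fits a width x height result into maxW x maxH
// (800 x 600 in the program): the smaller of the two per-axis ratios.
double fitScale(int width, int height, double maxW = 800.0, double maxH = 600.0) {
    return std::min(maxW / width, maxH / height);
}
```

For a result smaller than 800 x 600 the factor is greater than 1, so this step would enlarge rather than shrink the images; clamping it with std::min(1.0, ...) would avoid that if only downscaling is intended.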

Final result:

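3. Summary

Overall, during my time in the lab I ran into quite a few difficulties and pitfalls while working on this project, but I learned a great deal about image processing with OpenCV.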